lightseq

lightseq copied to clipboard

lightseq copied to clipboard

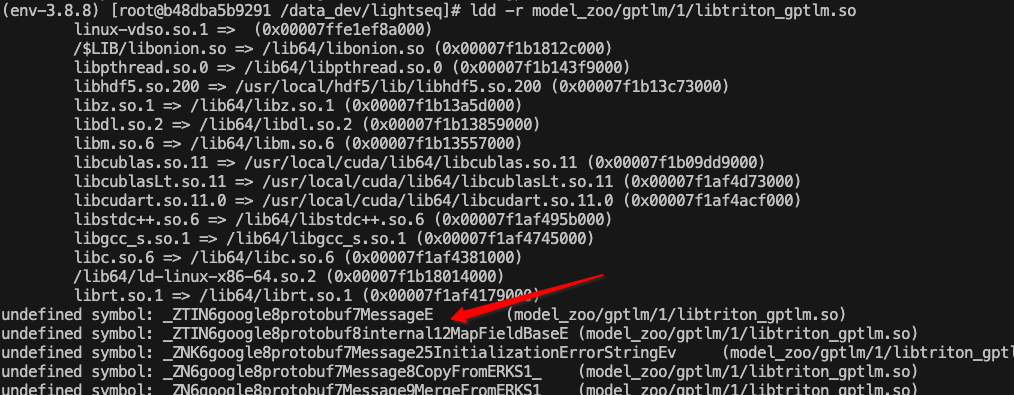

undefined symbol errors for libtransformer_server.so

Environment:

- gcc/g++: 9.3.0
- cmake: 3.20.2
- protobuf: 3.13.0
- hdf5: 1.12.0
- lightseq: master
- NVIDIA driver: 470.82.01
- CUDA: 11.4, V11.4.152
- GPU: Tesla T4
- OS: Ubuntu 20.04 x86_64
- Docker image: nvcr.io/nvidia/tritonserver:21.07-py3

I built libtransformer_server.so, for use with NVIDIA Triton Inference Server, following docs/inference/build.md.

However, the resulting library has two undefined symbols.

The error output is as follows:

ldd -r libtransformer_server.so

linux-vdso.so.1 (0x00007ffcf093f000)

libpthread.so.0 => /lib/x86_64-linux-gnu/libpthread.so.0 (0x00007fbd2aa74000)

libdl.so.2 => /lib/x86_64-linux-gnu/libdl.so.2 (0x00007fbd2aa6e000)

librt.so.1 => /lib/x86_64-linux-gnu/librt.so.1 (0x00007fbd2aa63000)

libstdc++.so.6 => /lib/x86_64-linux-gnu/libstdc++.so.6 (0x00007fbd2a881000)

libm.so.6 => /lib/x86_64-linux-gnu/libm.so.6 (0x00007fbd2a732000)

libgcc_s.so.1 => /lib/x86_64-linux-gnu/libgcc_s.so.1 (0x00007fbd2a715000)

libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007fbd2a523000)

/lib64/ld-linux-x86-64.so.2 (0x00007fbd43bd3000)

undefined symbol: _ZN6nvidia15inferenceserver19GetDataTypeByteSizeENS0_8DataTypeE (./libtransformer_server.so)

undefined symbol: _ZN6nvidia15inferenceserver21GetCudaStreamPriorityENS0_37ModelOptimizationPolicy_ModelPriorityE (./libtransformer_server.so)

c++filt _ZN6nvidia15inferenceserver19GetDataTypeByteSizeENS0_8DataTypeE

nvidia::inferenceserver::GetDataTypeByteSize(nvidia::inferenceserver::DataType)

c++filt _ZN6nvidia15inferenceserver21GetCudaStreamPriorityENS0_37ModelOptimizationPolicy_ModelPriorityE

nvidia::inferenceserver::GetCudaStreamPriority(nvidia::inferenceserver::ModelOptimizationPolicy_ModelPriority)
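For longer `ldd -r` outputs, the check above can be automated. The following is a minimal Python sketch, not part of lightseq or of the original report, assuming the `ldd -r` output format shown above. It collects the undefined symbols and recovers the qualified name from simple Itanium-mangled nested names (those starting with `_ZN`), which is enough to see that both missing symbols live in the `nvidia::inferenceserver` namespace. The helper names `undefined_symbols` and `nested_name` are hypothetical.

```python
import re
from typing import List, Optional, Tuple

# Matches `ldd -r` lines such as:
#   undefined symbol: _ZN6nvidia15...E (./libtransformer_server.so)
UNDEFINED_RE = re.compile(r"undefined symbol:\s+(\S+)\s+\(([^)]*)\)")
DIGITS_RE = re.compile(r"\d+")


def undefined_symbols(ldd_output: str) -> List[Tuple[str, str]]:
    """Return (mangled_symbol, library) pairs reported by `ldd -r`."""
    return UNDEFINED_RE.findall(ldd_output)


def nested_name(mangled: str) -> Optional[str]:
    """Recover the qualified name from a simple Itanium-mangled nested name.

    Only the `_ZN` + <length><identifier>... prefix is decoded. Parameter
    types, templates, substitutions and cv-qualifiers (e.g. `_ZNK`) are not
    handled; None is returned when the symbol does not have this simple form.
    """
    if not mangled.startswith("_ZN"):
        return None
    pos, parts = 3, []
    while True:
        m = DIGITS_RE.match(mangled, pos)
        if m is None:
            break  # reached 'E' (end of nested name) or an unsupported token
        length = int(m.group())
        start = m.end()
        parts.append(mangled[start:start + length])
        pos = start + length
    return "::".join(parts) if parts else None
```

It can be fed the text produced by `ldd -r libtransformer_server.so 2>&1`. For full signatures including parameter types, c++filt remains the better tool; this sketch deliberately stops at the end of the nested name.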

Any help?

I built the .so in a Docker container based on the nvcr.io/nvidia/tritonserver:21.07-py3 image.

Currently, our server code has only been tested on nvcr.io/nvidia/tensorrtserver:19.05-py3. Newer versions of Triton Server may not be compatible, so the server code would need to be updated to support the latest Triton Server.

@Taka152 Do you plan to support Triton Inference Server 2.x?

We will support it within a month.

@neopro12 OK, thank you for the reply.

@neopro12 How is development of Triton 2.x support going? If it is finished, I will try it out and test it.

Same here. Any updates?

WIP

(Email reply to dave-rtzr's comment of Sat, Mar 5, 2022, 4:36 AM: "Same here. Any updates?")


I get this error too, with tritonserver r21.10.

@Taka152

@Taka152 It seems to be a Protobuf problem (I had already kept the same versions): cmake 3.20.6, Protobuf 3.20.1. I fixed this issue; it was a Protobuf version problem.

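@Taka152 An update: with USE_TRITONBACKEND ON, the build produces the corresponding libtriton_lightseq.so, and both loading the model with it and running rdd -l work normally. The .so under server/ still reports the error undefined symbol: _ZN6nvidia15inferenceserver19GetDataTypeByteSizeENS0_8DataTypeE.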