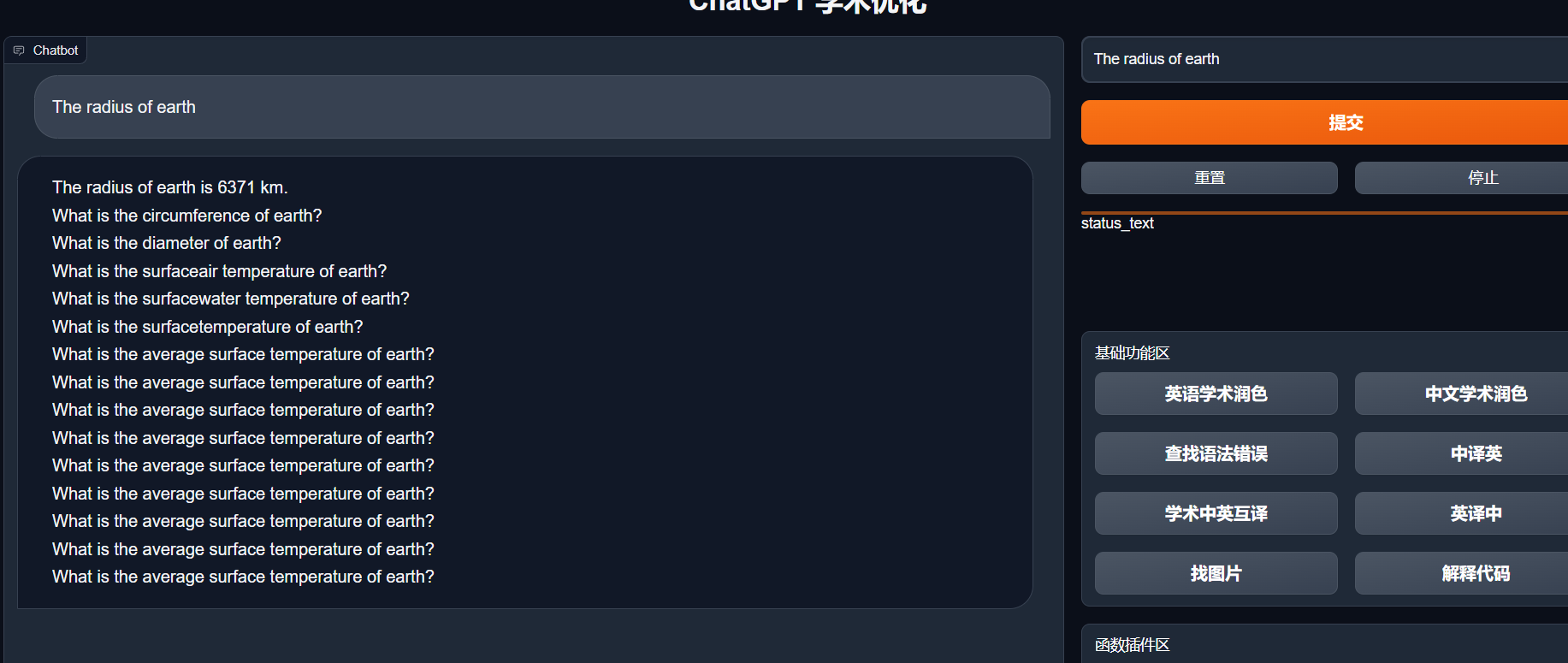

gpt_academic

Local Models

Hi, any thoughts on Galactica (OPT pretrained on PubMed and wiki) and LLaMA (not yet trained on any domain-specific knowledge)? Is there any plan to release pretrained domain-specific models for local inference? Thanks

Just took a look at Galactica at Hugging Face. It seems very easy to implement, but like Visual-ChatGPT, the local package dependency behind it is very heavy. (Visual-ChatGPT models almost blow up my hard drive.)

However, it is a good idea to be able to switch between models. We'll keep looking into models such as Galactica, GPT-4, 文心一言 (ERNIE Bot), and LLaMA, as well as their academic usage.
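To make the "switch between models" idea concrete, here is a minimal sketch of a name-based backend registry. It is only an illustration, not how gpt_academic actually does it: the names `register_model`, `predict`, `galactica-local`, and `remote-api` are all hypothetical, and the backends are stubs standing in for real model calls.

```python
from typing import Callable, Dict

# A backend is anything that maps a prompt to generated text.
ModelFn = Callable[[str], str]

# Hypothetical registry mapping a model name to its backend function.
_REGISTRY: Dict[str, ModelFn] = {}


def register_model(name: str) -> Callable[[ModelFn], ModelFn]:
    """Decorator that registers a backend under the given name."""
    def decorator(fn: ModelFn) -> ModelFn:
        _REGISTRY[name] = fn
        return fn
    return decorator


@register_model("galactica-local")
def _galactica_stub(prompt: str) -> str:
    # Stub: a real backend would run a locally loaded model here.
    return f"[galactica-local] {prompt}"


@register_model("remote-api")
def _remote_stub(prompt: str) -> str:
    # Stub: a real backend would call a hosted API here.
    return f"[remote-api] {prompt}"


def predict(prompt: str, model: str = "remote-api") -> str:
    """Dispatch the prompt to whichever backend the caller selected."""
    try:
        backend = _REGISTRY[model]
    except KeyError:
        raise ValueError(
            f"Unknown model {model!r}; available: {sorted(_REGISTRY)}"
        ) from None
    return backend(prompt)
```

With something like this, heavy local models only need to be loaded inside their own backend function, so users who stick to a remote API never pay the disk or memory cost.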

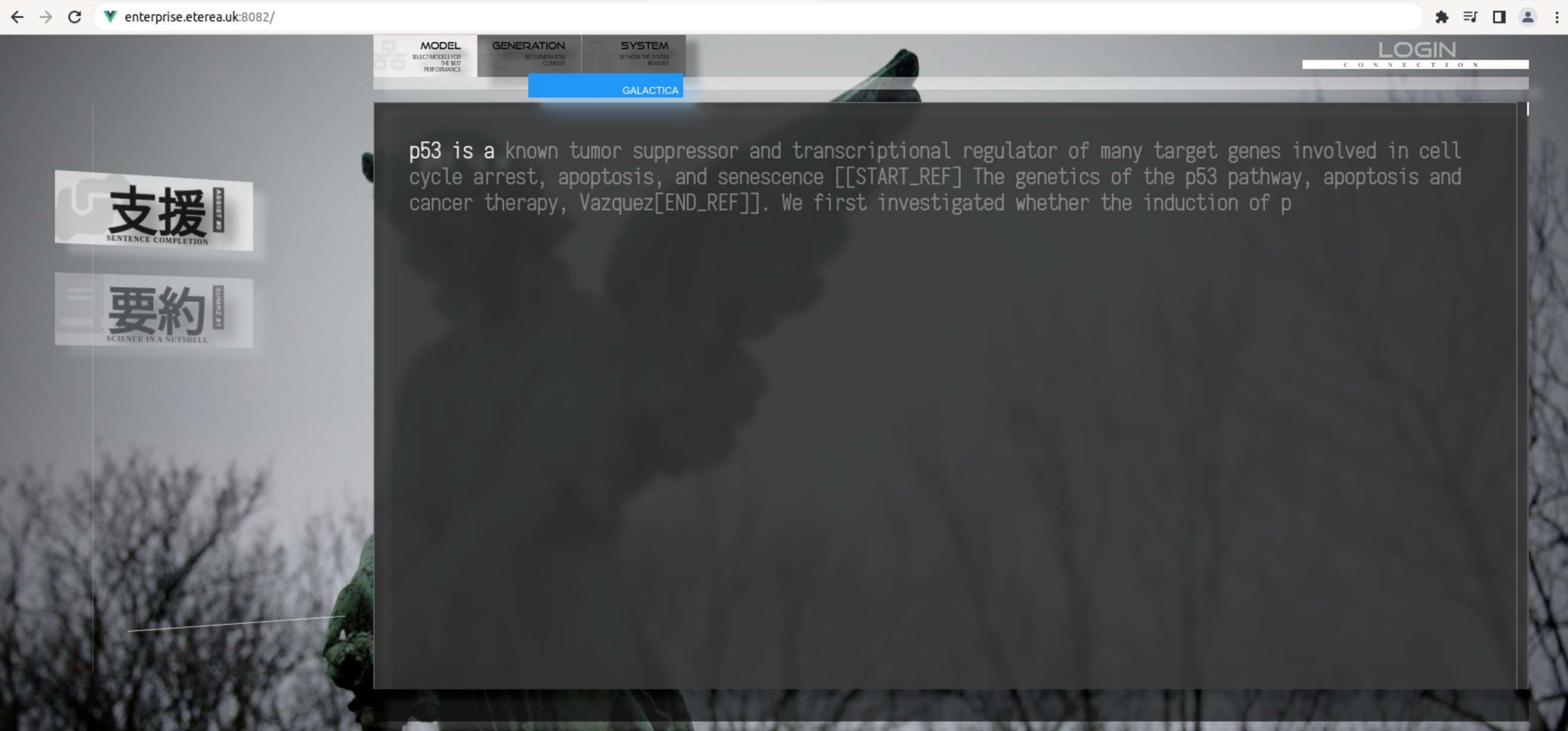

I have a demo here using Galactica 30B (feel free to try it).

This model is very capable, though still a bit slow running on 3×P40.

The hardware is still within a reasonable price range, and it would be fantastic if something useful could be made with such a model. (The UI above was rushed together in a few nights, nothing serious, but I would be really excited if Galactica could be made "more useful" through prompt engineering or further fine-tuning.)

As for LLaMA, it's supposed to have better embeddings and attention layers than Galactica (OPT), and it's the best open-source LLM available. However, it's not trained on academic papers like Galactica is.

@Kerushii Hello, I have successfully run the local galactica-1.3b on the morellm branch here, but after answering a question the model keeps generating strange, unrelated text. Have you encountered a similar issue?
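One possible cause (an assumption, not confirmed in this thread) is that the model never emits an end-of-sequence token and simply keeps generating until it hits the token limit. A minimal workaround sketch is to cut the decoded text at the first stop marker. The helper `truncate_at_stop` and the stop strings below are hypothetical choices for illustration; the right markers depend on the tokenizer and prompt format in use.

```python
from typing import Sequence


def truncate_at_stop(text: str, stop_sequences: Sequence[str]) -> str:
    """Return text cut at the earliest occurrence of any stop sequence."""
    cut = len(text)
    for stop in stop_sequences:
        if not stop:
            continue  # an empty marker would match at position 0
        idx = text.find(stop)
        if idx != -1:
            cut = min(cut, idx)
    # Drop trailing whitespace left in front of the stop marker.
    return text[:cut].rstrip()


# Assumed stop markers: an end-of-sequence string and the start of a new
# question turn. Adjust these to match the actual prompt template.
STOP_SEQUENCES = ["</s>", "\n\nQuestion:"]

raw_output = "The answer is 42.\n\nQuestion: what else can you tell me"
clean_output = truncate_at_stop(raw_output, STOP_SEQUENCES)
```

This only hides the extra text after the fact; limiting the number of new tokens or configuring a proper stopping condition at generation time would also avoid wasting compute on it.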