amplify-cli

amplify-cli copied to clipboard

amplify-cli copied to clipboard

fix: 🐛 Added virtual file system support to prevent out of sync

Added virtual file system support to snapshot backend to prevent #current-cloud-backend mismatch with s3 deployment

✅ Closes: aws-amplify/amplify-category-api#88

Description of changes

Issue #, if available

aws-amplify/amplify-category-api#88

Description of how you validated changes

yarn test and sample app deployment with changes and no issues when modifying schema.graphql during deployment.

Checklist

- [x] PR description included

- [x]

yarn testpasses - [ ] Tests are changed or added

- [ ] Relevant documentation is changed or added (and PR referenced)

- [ ] New AWS SDK calls or CloudFormation actions have been added to relevant test and service IAM policies

- [ ] Pull request labels are added

By submitting this pull request, I confirm that my contribution is made under the terms of the Apache 2.0 license.

Codecov Report

:x: Patch coverage is 90.19608% with 5 lines in your changes missing coverage. Please review.

:white_check_mark: Project coverage is 49.54%. Comparing base (e9fb32f) to head (5613296).

:warning: Report is 1390 commits behind head on dev.

Additional details and impacted files

@@ Coverage Diff @@

## dev #11458 +/- ##

==========================================

+ Coverage 49.47% 49.54% +0.06%

==========================================

Files 696 697 +1

Lines 33692 33740 +48

Branches 6896 6908 +12

==========================================

+ Hits 16670 16717 +47

- Misses 15483 15484 +1

Partials 1539 1539

:umbrella: View full report in Codecov by Sentry.

:loudspeaker: Have feedback on the report? Share it here.

:rocket: New features to boost your workflow:

- :snowflake: Test Analytics: Detect flaky tests, report on failures, and find test suite problems.

- :package: JS Bundle Analysis: Save yourself from yourself by tracking and limiting bundle sizes in JS merges.

In response to @sobolk

As reported in aws-amplify/amplify-category-api#88, (which links to other issues such as: #10118, aws-amplify/amplify-category-api#535) or aws-amplify/amplify-category-api#882, they all reference the same issue which is that there is a mismatch between the local #current-cloud-backend resources and the ones deployed. The current workaround to this issue, as suggested by @josefaidt is to follow the following instructions:

- navigate to the s3 console and identify the deployment bucket for our app

- download

#current-cloud-backend.zip - unzip the contents, correct the GraphQL Schema, and re-zip the folder

- be sure to not accidentally zip as a nested folder

unzip "#current-cloud-backend.zip" -d "#current-cloud-backend"mv "#current-cloud-backend.zip" backup.zip- correct the schema

cd "#current-cloud-backend"; zip -r "../#current-cloud-backend.zip" *

- re-upload the newly zipped archive to our s3 deployment bucket

- push from local machine with

amplify push --force - observe successful push 🙌

The mismatch occurs because of the resources being edited while a deployment is in progress.

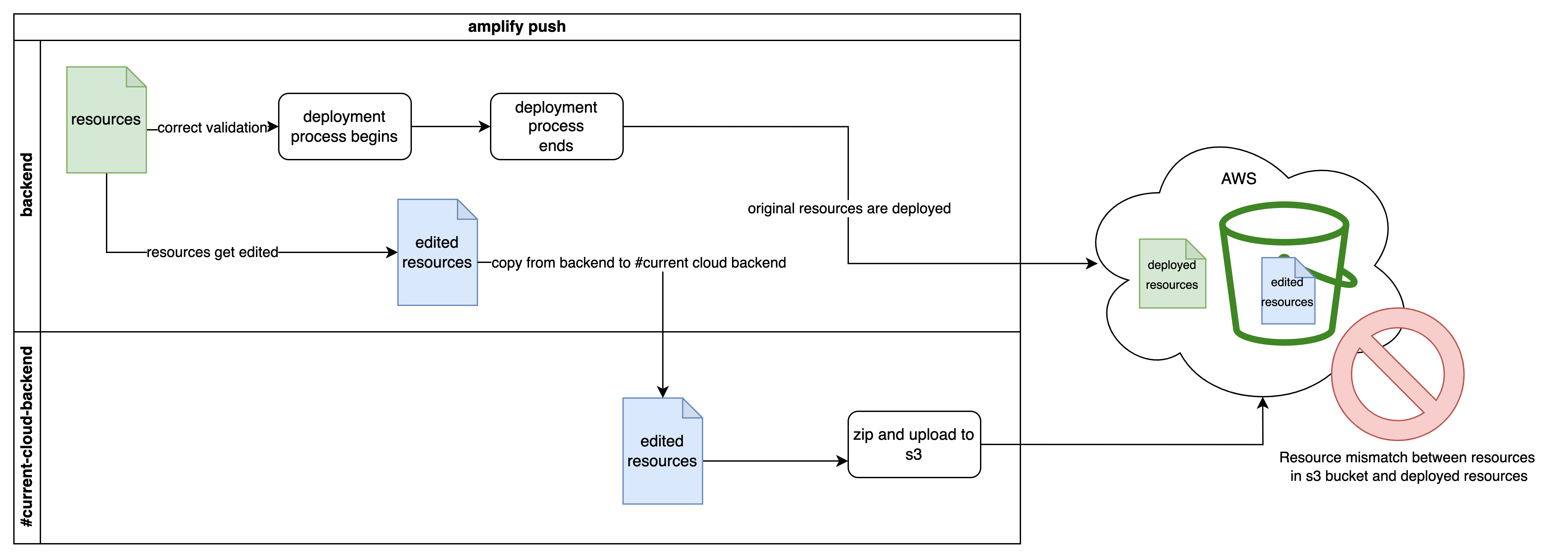

Behavior without the changes

Currently, after an amplify push, the following code is executed after all resources have been deployed:

https://github.com/aws-amplify/amplify-cli/blob/4a4bd618c88239ebb75e728bd265f039f34ee5b3/packages/amplify-provider-awscloudformation/src/push-resources.ts#L344-L367

Notice the updateamplifyMetaAfterPush function. This function calls the following:

https://github.com/aws-amplify/amplify-cli/blob/4a4bd618c88239ebb75e728bd265f039f34ee5b3/packages/amplify-cli/src/extensions/amplify-helpers/update-amplify-meta.ts#L263

This is the function in charge of moving the files located in amplify/backend to amplify/#current-cloud-backend. Notice the directory paths that are being used:

https://github.com/aws-amplify/amplify-cli/blob/4a4bd618c88239ebb75e728bd265f039f34ee5b3/packages/amplify-cli/src/extensions/amplify-helpers/update-amplify-meta.ts#L72-L74

As this uses the amplify/backend path, if there was a modification in the backend resources during the deployment process this files would then get copied into the amplify/#current-cloud-backend directory, which is then zipped into a file and uploaded to the s3 bucket. This is why the issues are happening, as the #current-cloud-backend is not corresponding to the one verified before the deployment process with the actual deployed resources.

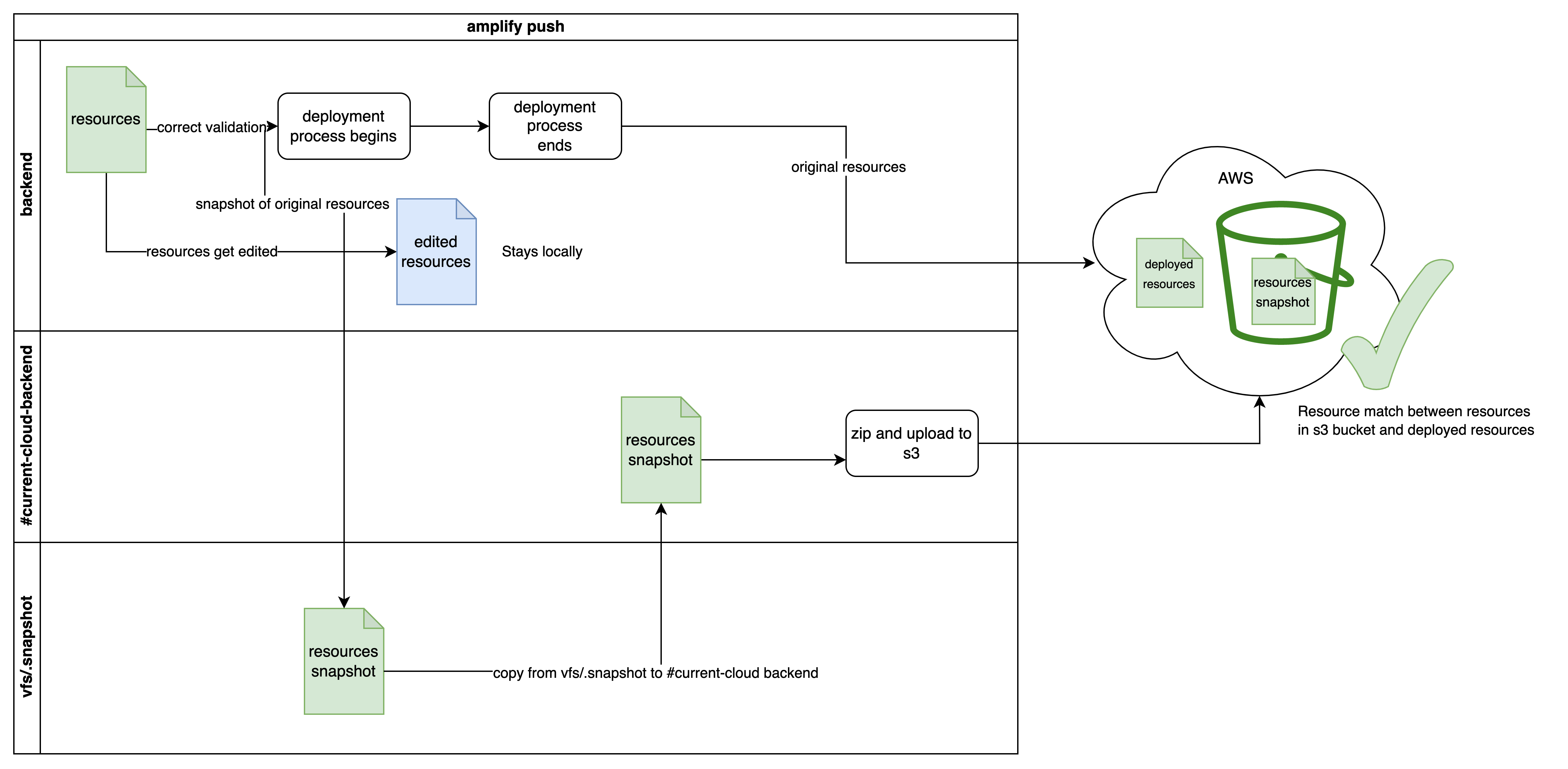

Behavior after the changes

By using a virtual file system (vfs) before the deployment begins it is possible to take a snapshot of the backend just before the deployment process begins. In that case, there is no chance that the developer accidentally changes any of the resource files that are going to get uploaded into the s3 bucket. The developer can actually edit the resources files, but that won't be uploaded as all of the snapshots will reside in the vfs and are going to be retrieved from it.

Why not s3 versioning?

This is not possible as this is independent from the s3 bucket version, as an actual inaccurate zip is being uploaded to the bucket, with other resources that weren't deployed previously.

Data flow before the changes

Data flow after the changes

Thank you @ncarvajalc for the context. It's very helpful. I like the idea of taking the snapshot. However, I think that if we snapshot into S3 rather than local fs or vfs we might cover more of these kind of cases or at least leave the system in recoverable state (that was my main purpose when I mentioned S3 versioning).

As far as I understand the current cloud backend synchronization happens after push completes. Which makes it prone to local process crashes. Consider the following scenario.

- User initiates push

- backed is copied into vfs

- CFN deployment kicks off

- CLI process is interrupted/terminated (think OS crash, VM crash, user sends sigterm or so).

- CFN deployment completes eventually. (or perhaps process crashes before post push actions trigger). At this point the ephemeral snapshot is gone, resources are not in sync and user might have edited or lost backend folder.

My suggestion is to explore how could we use S3 to make push even more resilient and transactional.

@sobolk Okay, I can take a look at the S3 approach!

Hi @ncarvajalc are you still interested in looking into @sobolk's suggestions?