drill


IllegalArgumentException: databaseName can not be null - Mongo DB

Hi,

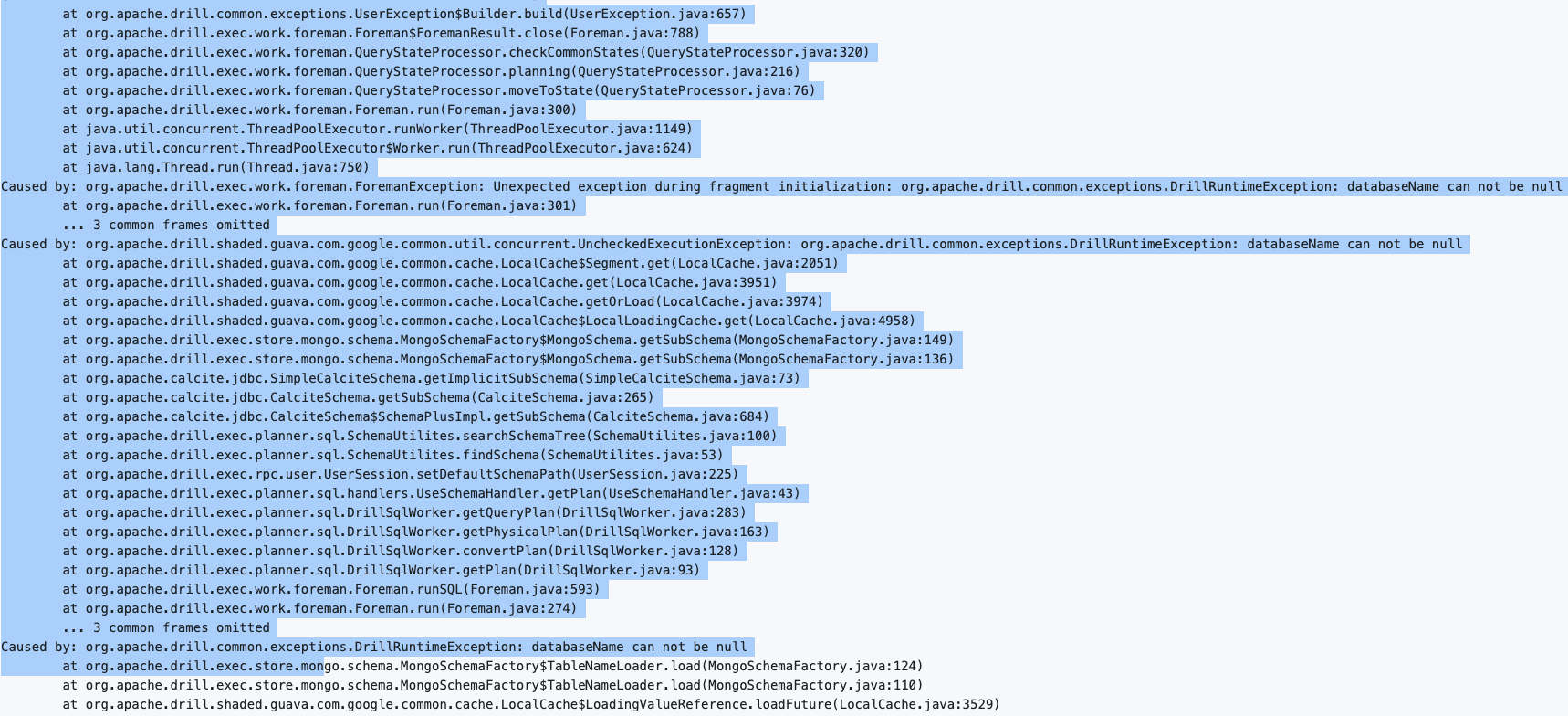

I am trying to connect Drill to a MongoDB-compatible database hosted on AWS DocumentDB, but every query throws this exception: `org.apache.drill.common.exceptions.UserRemoteException: SYSTEM ERROR: IllegalArgumentException: databaseName can not be null`

Following is my Mongo storage plugin configuration (original values modified), which includes the database name:

`{ "type": "mongo", "connection": "mongodb://myusername:<password>@<host>:27017/DatabaseName?authSource=admin&readPreference=primary&ssl=true&tlsAllowInvalidCertificates=true&tlsAllowInvalidHostnames=true", "enabled": true }`

Even though I provide the database name, Drill still throws the null `databaseName` exception.
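Since the error complains about a null database name, one quick way to rule out a malformed connection string is to parse it and confirm that the path component (the part after the hosts and before `?`) is non-empty. Below is a minimal Python sketch using only the standard library. `database_from_uri` is a hypothetical helper, and it only follows the generic URI layout (`scheme://credentials@hosts/database?options`). It is not the exact parsing done by Drill or the MongoDB Java driver.

```python
from typing import Optional
from urllib.parse import unquote, urlsplit


def database_from_uri(uri: str) -> Optional[str]:
    """Return the database named in a MongoDB connection string, or None if absent."""
    parts = urlsplit(uri)
    if parts.scheme not in ("mongodb", "mongodb+srv"):
        raise ValueError(f"not a MongoDB connection string: scheme {parts.scheme!r}")
    # The path looks like "/<database>"; options after "?" are already split off.
    name = unquote(parts.path.lstrip("/"))
    # An empty path ("" or "/") means no database was given.
    return name or None
```

For the connection string posted above this returns `DatabaseName`. If it returns a name for your configuration too, the null database name is more likely coming from somewhere other than the URI path.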

I installed Drill on my Windows machine and started it in embedded mode with `drill-embedded.bat`.

I faced exactly the same issue. I am running three Drillbits on a ZooKeeper cluster, with a single Mongo storage plugin configuration shared by all three Drillbits.

MongoDB is installed on node3 of the cluster, and the connection uses MongoDB's private IP. I can run a query from node1 and node3 but not from node2, even though I can connect to MongoDB from the Linux command line on node2.

All three nodes are identical, and the ports are open between them. I am using Drill 1.19.

Any update on this? I'm still getting the same error.

It didn't work for me, so I stopped using Drill.

What was the alternative?

Reading the comments here, it looks to me like different issues have been encountered by different commenters. If you're using the latest release of Drill and can share config and error detail we can try to help...

Mongo config: `{ "type": "mongo", "connection": "mongodb+srv://username:<password>@<host>/dbname", "pluginOptimizations": { "supportsProjectPushdown": true, "supportsFilterPushdown": true, "supportsAggregatePushdown": true, "supportsSortPushdown": true, "supportsUnionPushdown": true, "supportsLimitPushdown": true }, "batchSize": 100, "enabled": true }`

The options `store.mongo.read_numbers_as_double`, `store.mongo.all_text_mode` and `store.mongo.bson.record.reader` are set to `false`.

@sid-viadots what is the query that you're running when you encounter that error?

The query is something like this:

```sql
SELECT count(*) AS count
FROM
  (WITH drs as
     (SELECT d.10.1.1 as 1011,
             d.1,
             2,
             d.2,
             d.4 as 4,
             d.5,
             d.6 as 6,
             d.7 as 7,
             d.8,
             d.9.1 as 91,
             d.9.2 as 92,
             d.date
      FROM mongo.db.drs d
      where d.6 is not null
        and d.7 is not null),
   znes as
     (SELECT flatten(kvgen(z._id)) as _id,
             z.zn
      FROM mongo.db.z z),
   ctes as
     (SELECT flatten(kvgen(c._id)) as _id,
             c.cn
      FROM mongo.db.c c),
   drsWithId as
     (select flatten(kvgen(dr.6)) as 6,
             flatten(kvgen(dr.7)) as 7,
             dr.*
      from dr)
   select drsWithId.*,
          znes.zn as zn,
          ctes.cn as cn
   from drsWithId
   left join znes on znes._id.value = drsWithId.6.value
   left join ctes on ctes._id.value = drsWithId.7.value) AS virtual_table
WHERE cn IN ('text')
LIMIT 50000
```

And do any of these queries succeed? I'm trying to understand if the storage plugin configuration works at all.

```sql
select * from mongo.db.drs limit 10;
select * from mongo.db.z limit 10;
select * from mongo.db.c limit 10;
```

Yes, they do. A similar error happens for other queries that use three tables; it has never occurred for queries that use two tables.

Can you attach the full JSON query profile? It's available in the Drill web UI at Profiles -> [Select your query] -> JSON Profile. It probably won't include sensitive information, but you can edit it if need be. You can also email it to me if you prefer to keep it off the public internet.

> What was the alternative?

I didn't consider an alternative. Instead, I focused on optimizing my data queries, which resolved my slow-query issues for the time being. High-performance analytics across my data (which is split between MongoDB and MySQL) is still pending, and I'll start working on it again when I have time. My MongoDB is actually AWS DocumentDB, so that might be the real issue that prevented me from connecting it to Drill.

I also have the same issue. Can someone give an update?