alpaca.cpp

Has anyone tried the 30b model?

I think it should be possible to run a 30b model in the same way as this using 30b-lora. I haven't even tried it because I'm really low on memory.

I successfully ran a 30B model on my EC2 instance.

main: seed = 1679329929

llama_model_load: loading model from 'ggml-30b-lora-q4.bin' - please wait ...

llama_model_load: ggml ctx size = 25631.50 MB

llama_model_load: memory_size = 6240.00 MB, n_mem = 122880

llama_model_load: loading model part 1/1 from 'ggml-30b-lora-q4.bin'

llama_model_load: ................................................................... done

llama_model_load: model size = 19391.35 MB / num tensors = 543

system_info: n_threads = 16 / 32 | AVX = 1 | AVX2 = 1 | AVX512 = 0 | FMA = 1 | NEON = 0 | ARM_FMA = 0 | F16C = 1 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 0 | SSE3 = 1 | VSX = 0 |

main: interactive mode on.

sampling parameters: temp = 0.500000, top_k = 40, top_p = 0.700000, repeat_last_n = 64, repeat_penalty = 1.300000

Write a ten-paragraph essay about World War II.

World War Two was one of the most devastating conflicts in human history, with millions dead and entire countries destroyed by its end. It began when Nazi Germany invaded Poland on September 1st, 1939, sparking a global war that would last six years until Japan’s surrender to Allied forces in August 1945. The conflict was fought between the Axis powers of Germany and Italy with their allies against the Allied Powers consisting primarily of Britain, France, Russia (later replaced by the Soviet Union) and America.

The war had many causes but one major factor was Adolf Hitler’s rise to power in Nazi Germany which led him to pursue his goal of a German Empire through military conquest. This culminated with the invasion of Poland on September 1st, 1939, marking the start of World War Two and leading Britain and France declaring war against Germany two days later.

The early years were dominated by land battles in Europe between Nazi forces and those from Allied countries such as Britain and Russia (later replaced with Soviet Union). The Battle of Stalingrad was a major turning point, where the Soviets managed to halt Hitler’s advance into Eastern Europe. In North Africa there had been fierce fighting between German Afrika Korps troops under Erwin Rommel against British forces led by Bernard Montgomery and in 1942 American soldiers joined them for Operation Torch which saw Allied victory over the Germans.

In Asia, Japan was also on an expansionist path with its invasion of China leading to a war between it and America that culminated at Pearl Harbor when Japanese forces attacked US naval bases in Hawaii. This led directly into World War Two as American troops were sent out across the Pacific Ocean to fight back against them.

The Allies then began their push towards Germany, with D-Day on June 6th, 1945 being a major turning point where British and US forces landed in Normandy and fought their way into Nazi territory. This was followed by Operation Overlord which saw the liberation of France from German control as well as Belgium and Luxembourg.

The war ended with Germany’s surrender on May 7th, 1945 after Hitler committed suicide in his Berlin bunker. The Allies then turned their attention to Japan who had refused to give up despite the loss of most its territories by this point. This led to two atomic bombings at Hiroshima and Nagasaki which finally forced them into surrendering on August 15th, ending World War Two in a way that no one could have predicted six years earlier when it had begun with Germany’s invasion of Poland.

Awesome! Where did you download the 30B from?

@myeolinmalchi do you have a torrent for the 30B or 65B weights?

What is the memory usage for the 30B model?
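The load log posted earlier in the thread gives a rough answer. As a sanity check, the sketch below reproduces the reported memory_size and n_mem figures. The layer count (60), embedding width (6656), context length (2048) and 32-bit cache entries are assumptions that are not stated in the thread; they are used here only because they reproduce the logged numbers.

```python
# Sanity check of the cache size reported in the load log.
# Assumed (not stated in the thread): n_layer = 60, n_embd = 6656,
# context length 2048, and 4-byte (f32) cache entries.
N_LAYER = 60
N_EMBD = 6656
N_CTX = 2048
BYTES_PER_F32 = 4
MIB = 1024 * 1024

# One cache slot per layer per context position.
n_mem = N_LAYER * N_CTX

# Factor 2: one tensor for keys and one for values.
kv_bytes = 2 * n_mem * N_EMBD * BYTES_PER_F32
kv_mb = kv_bytes / MIB

model_mb = 19391.35  # "model size" line from the log
total_mb = model_mb + kv_mb

print(n_mem)               # 122880, matches n_mem in the log
print(kv_mb)               # 6240.0, matches memory_size in the log
print(round(total_mb, 2))  # 25631.35
```

The sum lands within a fraction of a megabyte of the logged ggml ctx size of 25631.50 MB, so under these assumptions the 30B q4 file needs roughly 25 GB in total, of which about 19 GB is the weights.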

30B wouldn't run for me. It gave me an error saying that "tok_embeddings.weight" was the wrong size. It was something like 1664 when it should've been 6566 https://huggingface.co/Pi3141/alpaca-30B-ggml

Hi, could you please tell us the specific hardware configuration of the EC2 instance?

Nvm I got it to work

@ItsPi3141 what was the process that you did in order to get it to work?

@ItsPi3141 How did you get it to work?

I'm not exactly using Alpaca.cpp. I'm using the Dalai version of Alpaca.cpp (https://github.com/candywrap/alpaca.cpp). I have not tried it on the actual Alpaca.cpp, but it might work.

The edit needed to make it work was changing the hard-coded n_parts for 30B and 65B (the 65B change isn't needed yet, but it means less work when 65B comes out). This is the original and this is the edit I made.

The problem was that the original LLaMA 7B, 13B, 30B, and 65B models are split into 1, 2, 4, and 8 files respectively, and those counts are the hard-coded n_parts values. When 13B was made, a fix to alpaca.cpp changed n_parts for 13B from 2 to 1, so alpaca.cpp no longer looks for a second part of the model file and runs correctly. The same fix wasn't made for the 30B and 65B models at the time, so the hard-coded values were 1, 1, 4, 8. You just have to change all of them to 1, and then 30B (and 65B in the future) will work.
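To illustrate the explanation above, here is a minimal Python sketch of the three states of the table. It is not the actual chat.cpp code: the dictionary names, the keys, and the `expected_files` helper are hypothetical, and the ".1", ".2" suffix naming for extra parts is an assumption about how a loader would look for them.

```python
# Hypothetical Python mirror of the hard-coded table described above.
# Keys are the model sizes named in the thread; values are how many
# files the loader expects for that size. The real table may be keyed
# differently.
ORIGINAL_LLAMA_N_PARTS = {"7B": 1, "13B": 2, "30B": 4, "65B": 8}
AFTER_13B_FIX = {"7B": 1, "13B": 1, "30B": 4, "65B": 8}
ALL_SINGLE_FILE = {size: 1 for size in ORIGINAL_LLAMA_N_PARTS}


def expected_files(model_path, n_parts):
    """List the files a loader would look for.

    Assumption: parts after the first are named '<path>.1', '<path>.2', ...
    """
    return [model_path if i == 0 else f"{model_path}.{i}" for i in range(n_parts)]


path = "ggml-30b-lora-q4.bin"

# With n_parts = 4, three extra files are expected that a merged
# single-file 30B model does not have.
print(expected_files(path, AFTER_13B_FIX["30B"]))

# With n_parts = 1, only the single file is looked up.
print(expected_files(path, ALL_SINGLE_FILE["30B"]))
```

With every entry set to 1, the lookup matches the "loading model part 1/1" line in the log posted earlier.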

I've downloaded the 65B model onto a virtual environment, but I'm not clear on the process. Do you need to use Alpaca-LoRA first to fine-tune it yourself, or should I just use your repo with the 65B model directly?

You'll need to fine-tune it yourself because the Alpaca LoRA 65B weights are not available yet. I can't fine-tune it because I don't have a powerful graphics card. You can look at the Alpaca GitHub repo for instructions on how to fine-tune the model and then merge the result into the LLaMA 65B model. If you manage to fine-tune it, I'd appreciate it if you shared the weights so that the rest of us can also use 65B.

https://github.com/shawwn/llama-dl

That's LLaMA, not Alpaca.

The 30B model produces much worse results. It's also a lot slower.

Example for the prompt "What's the difference between alpacas and lamas?":

7B: The main differences are in their breeds, dietary requirements (lactating animals require more nutrition), temperament/personality traits (alpha males tend to be dominant while alpha females can have a calmer demeanor) and social structure. Alpacas live together as herds with one male leader called an alphaherd, whereas llamas are usually kept in pairs or small groups of three-to-five animals per owner/herdsman (called lactation units).

30B: AlphaCas are small, lightweight robots designed for use in education or research settings while Lama is an open source robotics platform that can be used to build a variety^C <- I killed it after several minutes because it was excruciatingly slow.

In WSL2 / Debian under Windows 11 x64, the 7B takes 24 GB of RAM while the 30B takes 34 GB of RAM. Despite having a 16-core/32-thread CPU, I was barely seeing 8-9% activity across all cores during generation (in both cases).

Which lora did you use? In my case, it was better than 7b or 13b for most of the questions.

I just used the instructions in the README which point to https://huggingface.co/Pi3141/alpaca-30B-ggml

Without knowing how the file was converted, it's hard to determine the cause. I followed this method exactly.

I've heard that the quantization in llama.cpp has been modified to improve the answer quality issue, but I can't confirm this until a ggml file compatible with the current llama.cpp is released.

Hello! I made that Alpaca 30B file. Several people have used it, and we all agree it gives better results. Can you tell me what your parameters were?

I made that file. I followed the same instructions as you, just used 30B instead of 13B and modified some variables to make it work.

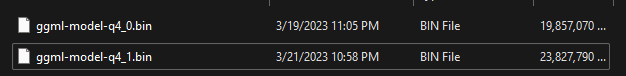

I will take a look at the new quantization method. I believe it creates a file that ends with q4_1.bin instead of q4_0.bin, is that right? I'll see if I can update the Alpaca models to use the new method. You can probably expect the new 30B model within the next 24-ish hours.

I have the same problem. What is your RAM size?

It's not a problem with RAM, but if you want to know, I have 32 GB of RAM and Alpaca 30B uses 20 GB. The problem was in the main.cpp file (or, for alpaca.cpp, the chat.cpp file). I made a PR earlier today that was merged, and it should have fixed it. Try resyncing the repository and running make/cmake again.

I didn't specify any parameters, just used ./chat -m; are there any parameters you'd recommend?

Sorry I am a noob, so should I just open PowerShell in the release folder, and then type: make/cmake ? thanks in advance!

Sorry I am a noob, so should I just open PowerShell in the release folder

You'll need to pull or re-clone the repository first, if you haven't done so already. Then build again according to the instructions in the readme file.

and then type: make/cmake ?

The command is not make/cmake; I meant make or cmake, depending on your OS. On Windows, you will have to install CMake and run the following:

cd alpaca.cpp

mkdir build

cd build

cmake ..

cmake --build . --config Release

On Linux and macOS:

cd alpaca.cpp

make chat

I disagree that the 30B model produces much worse results. This is what I got (I fixed your typo in "llama"):

What's the difference between alpacas and llamas?

The main differences between Alpacas and Llamas are their size, fur type/colour, and temperament. While both species come from South America (Alpaca in Peru) they differ quite a bit physically as well as behaviourally. The Alpaca is much smaller than the llama with an average height of around two feet compared to six or more for llamas. They also tend to have longer and softer fur that comes in many different colours while Llamas are generally single coloured brown, black & white and grey - though their coats can be long it is not as fine as Alpaca wool which makes the latter quite popular among craftsmen! Finally they differ behaviourally; llamas tend to have a more aggressive temperament whereas alpacas are often considered friendlier.

If I include your typo and use "lama" instead:

What's the difference between alpacas and lamas?

The main difference between alpacas and llamas is their size, as alpaca are smaller than llama. Alpacas also tend to have finer fleece compared with llamas which has a coarser coat. Additionally, the color of an alpaca's fur tends to be more varied than that of a lamas'.

My settings are:

- temp: 0.9

- top_k: 420

- top_p: 0.9

- repeat_last_n: 64

- repeat_penalty: 1.3
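For anyone unsure what temp, top_k and top_p do, here is a minimal, generic sketch of temperature scaling followed by top-k and top-p (nucleus) filtering. It is an illustration of the general technique only, not the sampling code used by alpaca.cpp; the function name and the toy logits are made up, and the repeat penalty is left out.

```python
import math


def filter_top_k_top_p(logits, top_k, top_p, temp):
    """Return {token_index: probability} after temperature, top-k and top-p."""
    # Temperature scaling, then a numerically stable softmax.
    scaled = [value / temp for value in logits]
    peak = max(scaled)
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    probs = [(index, e / total) for index, e in enumerate(exps)]

    # top-k: keep only the k most likely tokens.
    probs.sort(key=lambda pair: pair[1], reverse=True)
    probs = probs[:top_k]

    # top-p: keep the shortest prefix whose cumulative probability reaches top_p.
    kept = []
    cumulative = 0.0
    for index, p in probs:
        kept.append((index, p))
        cumulative += p
        if cumulative >= top_p:
            break

    # Renormalise so the surviving probabilities sum to 1.
    norm = sum(p for _, p in kept)
    return {index: p / norm for index, p in kept}


toy_logits = [2.0, 1.0, 0.5, -1.0]  # made-up scores for a 4-token vocabulary

# Settings from this post: a wide candidate pool.
wide = filter_top_k_top_p(toy_logits, top_k=420, top_p=0.9, temp=0.9)
# Settings from the EC2 log earlier in the thread: a much narrower pool.
narrow = filter_top_k_top_p(toy_logits, top_k=40, top_p=0.7, temp=0.5)

print(sorted(wide))
print(sorted(narrow))
```

On the toy input, the higher temperature and top_p keep more candidate tokens than the settings from the earlier log, which is the general effect to expect: more varied output at the cost of less predictable answers.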

I'm using these params and I'm getting fairly short responses too. (using your 30B).

sampling parameters: temp = 0.900000, top_k = 420, top_p = 0.900000, repeat_last_n = 64, repeat_penalty = 1.300000

> What's the difference between alpacas and llamas?

Alpacas are members of the camelid family, while Llamas belong to the same genus but a different species (Vicugna). Alpaca is an indigenous South American livestock breed originating in Peru.

To be fair - the smaller alpacas would usually give me shortish answers so it could be a param issue somewhere else.

Try this prompt template:

Below is an instruction that describes a task. Write a response that appropriately completes the request.

### Instruction:

put your instruction or question here

### Response:
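If you are scripting this rather than typing it by hand, a small helper along these lines can fill in the template. The function name is hypothetical, and the exact blank-line spacing between sections is an assumption based on how the template is laid out above.

```python
# Template text copied from the post above; the blank-line spacing
# between sections is an assumption.
PROMPT_TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n"
    "{instruction}\n\n"
    "### Response:\n"
)


def build_prompt(instruction):
    """Wrap a question or instruction in the template (hypothetical helper)."""
    return PROMPT_TEMPLATE.format(instruction=instruction.strip())


prompt = build_prompt("What's the difference between alpacas and llamas?")
print(prompt)
```

The resulting string ends right after "### Response:", so the model's continuation is the answer.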

Update: I have made a ggml model with the new quantization, but it seems to be broken.

Here is a file size comparison:

The q4_1 model appears to be broken: in my initial testing, it only generates a series of "u u u u u u u u u".

I don't know whether the new quantization method is even complete, because it's not mentioned in the quantize.py file, only in the quantize.cpp file. If anyone has any ideas about why this is happening, please let me know. Thank you.

Wow, thanks! I reinstalled and it worked! I am getting 2 words/sec; my CPU is an AMD Ryzen 9 5900X. Is that a normal speed?