clearml


Log fitted "hyper parameters" when possible

Heya!

Right now, ClearML automagically logs the configuration for models it recognizes, but not all parameters of interest are logged.

For example, we occasionally use a linear regression model in our flow, and it would be nice if ClearML also logged e.g. the `coef_` and `intercept_` parameters (fitted hyperparameters).

The same goes for other models that have various coefficients that could be exposed.

Hi @idantene, so far you can log those parameters in the Experiment/Configuration section, but I guess you would prefer them in the model section. Do you explicitly need this data to be logged automagically? Or would an API function that lets you log it satisfy your need? You could then choose the data you want to log and connect it by invoking a function, the way you connect task parameters. We could think of something like `task.model.connect(parameter)`, where `parameter={"coef": skmodel.coef_, ...}`.
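The `task.model.connect(...)` call above is only a proposal in this thread, not an existing API. As a minimal sketch of the workaround that already exists (logging into the configuration section), a plain dictionary of fitted values can be connected to the task. This assumes ClearML's `Task.connect(mutable, name=...)`; the helper `log_linear_fit` and the section name `"fitted"` are hypothetical, and any object with a compatible `connect` method can stand in for the task.

```python
from typing import Any, Dict, Iterable


def log_linear_fit(task: Any, coef: Iterable[float], intercept: float) -> Dict[str, Any]:
    """Hypothetical helper: connect a single-target linear fit to a task-like object.

    `task` is assumed to expose a ClearML-style `connect(mutable, name=...)` method.
    """
    # Convert numpy values to plain floats so the dict holds simple, serializable values
    params = {"coef": [float(c) for c in coef], "intercept": float(intercept)}
    # Connect the dict under its own section name, next to the regular task parameters
    task.connect(params, name="fitted")
    return params
```

After `model = LinearRegression().fit(X, y)` on a single target, the call would be `log_linear_fit(task, model.coef_, model.intercept_)`, with `task` obtained from `Task.init(...)`. For multi-target fits `coef_` is two-dimensional, so the flattening above would need adjusting.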

Hey @DavidNativ,

Sorry, I forgot to reply to this!

The automagical logging is what I'm looking for. We can of course customize the code and connect the configuration/objects as needed, but that would require us to consider each model separately and add custom code for each one.
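Per-model custom code can be reduced by leaning on a scikit-learn convention: attributes estimated during `fit` are public and end with a trailing underscore (`coef_`, `intercept_`, `n_features_in_`, ...). A minimal sketch, assuming the estimator follows that convention; `fitted_params` is a hypothetical helper, not part of ClearML or scikit-learn.

```python
from typing import Any, Dict


def fitted_params(estimator: Any) -> Dict[str, Any]:
    """Hypothetical helper: collect scikit-learn-style fitted attributes."""
    params: Dict[str, Any] = {}
    for name, value in vars(estimator).items():
        # Fitted attributes are public and end with "_"; skip private/dunder names
        if not name.endswith("_") or name.startswith("_"):
            continue
        # numpy arrays and scalars expose tolist(); convert them to plain Python values
        params[name] = value.tolist() if hasattr(value, "tolist") else value
    return params
```

For a fitted `LinearRegression`, the result would include `coef_` and `intercept_` together with other fitted attributes such as `n_features_in_`. Some estimators (e.g. ensembles with `estimators_`) store sub-estimators rather than numbers in fitted attributes, so those would need filtering before logging.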

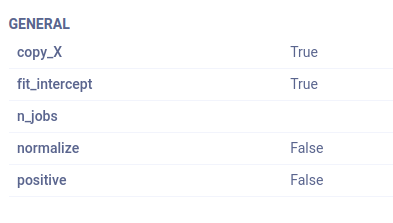

It seems ClearML does this "out of the box" for e.g. XGBoost, LightGBM, etc, and even for sklearn it logs the following:

So I was hoping it could be extended to support these additional, informative attributes.


Hi @idantene,

Now it's our turn to answer slowly :wink: Care to share with us a minimal example with these parameters? We tried running our sklearn and XGBoost examples, and no hyperparameters were fetched automagically. If you can share something that logs 1-2 params you'd like us to capture automagically, that'll also be awesome!

I want to make sure I understand which parameters you want captured, so we can figure out what it takes for us to capture them.

Hey @erezalg,

They are captured automatically; see the code or the docs, and also e.g. Catboost.

I do see that this does not exist for XGBoost, and I could not find supporting code for scikit-learn generally, which would explain the missing hyperparameters.

Maybe this issue can be generalized to add support for more hyperparameter automagical logging whenever possible?
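The "automagical" part amounts to a hook around the training call. As a rough sketch of how a generalized hook could work, assuming the wrapped library follows scikit-learn's trailing-underscore convention for fitted attributes: `auto_log_fitted` and the `log` callback are hypothetical and do not describe how ClearML's own integrations are implemented.

```python
import functools
from typing import Any, Callable, Dict


def auto_log_fitted(cls: type, log: Callable[[str, Dict[str, Any]], None]) -> type:
    """Hypothetical hook: patch cls.fit so each fit reports its fitted attributes."""
    original_fit = cls.fit

    @functools.wraps(original_fit)
    def fit(self, *args, **kwargs):
        result = original_fit(self, *args, **kwargs)
        # Gather public attributes ending in "_" (the fitted-attribute convention)
        fitted = {k: v for k, v in vars(self).items() if k.endswith("_") and not k.startswith("_")}
        # Hand them to whatever logger is plugged in, keyed by the estimator's class name
        log(type(self).__name__, fitted)
        return result

    cls.fit = fit
    return cls
```

The design keeps the logger pluggable, so the same hook could feed a task's configuration or any other sink. Calling it twice on the same class would wrap `fit` twice and log each fit twice, so a real integration would need a guard against double patching.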

@idantene,

"Whenever possible" is a bit ambitious, but we'll do our best :wink: I think our CatBoost and LightGBM integrations were done at a later stage, so we had a bit more insight into what we can achieve with these integrations.

I'll update here if I need some more info, or when it's ready.