tfvaegan

About the loss in the training process

Hello, I monitored the loss during training and found that the total loss did not approach 0 in the end; instead, it stabilized around a fixed value with small oscillations. Is this normal? If so, why doesn't the loss continue to decrease? I hope you can answer! (I tested with the parameters you provided for the FLO dataset.)

Hi @Sakura-rain, Thank you for your interest in our work. Sorry for the late response. Is your issue solved?

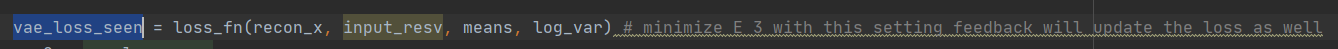

Thank you very much for your reply. Unfortunately, the problem still exists. I ran the experiments with the settings in the Script folder, but vae_loss_seen stops decreasing once it reaches a certain value (for example, on the FLO dataset it plateaus at around 300). I expected this value to approach zero as training progresses. Despite this, the final results are similar to those reported in your paper. I am not sure whether my experiment is correct. I hope you can give me an answer.
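One possible explanation for such a plateau, offered only as a sketch: if the VAE reconstruction term is a summed binary cross-entropy over features scaled to [0, 1] (an assumption here, not something confirmed in this thread), then the loss has a positive floor equal to the entropy of the soft targets, and it cannot reach zero even with a perfect reconstruction. The helper `bce_sum` and the synthetic 2048-dimensional `target` vector below are hypothetical and only illustrate the effect; they are not taken from the repository.

```python
import math

def bce_sum(pred, target, eps=1e-12):
    """Summed binary cross-entropy between predictions and targets in [0, 1]."""
    total = 0.0
    for p, t in zip(pred, target):
        # Clip predictions away from 0 and 1 so the logarithms stay finite.
        p = min(max(p, eps), 1.0 - eps)
        total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total

# Hypothetical feature vector with soft (non-binary) values in (0, 1).
target = [0.05 + 0.9 * ((i * 37) % 100) / 100.0 for i in range(2048)]

# A perfect reconstruction (pred == target) still leaves a positive loss:
# the summed entropy of the soft targets.
floor = bce_sum(target, target)

# An uninformative reconstruction (all 0.5) gives a larger loss.
worse = bce_sum([0.5] * len(target), target)

# With strictly binary targets the floor does vanish (up to clipping).
binary_floor = bce_sum([1.0, 0.0], [1.0, 0.0])

print(floor, worse, binary_floor)
```

Under that assumption, a loss that settles at a stable nonzero value while accuracy matches the reported numbers would be expected behavior rather than a sign of a broken run. Any KL term in the loss adds a further non-negative amount on top of this floor.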

Hi @Sakura-rain, are you able to replicate the numbers?