Exploding memory during refinement

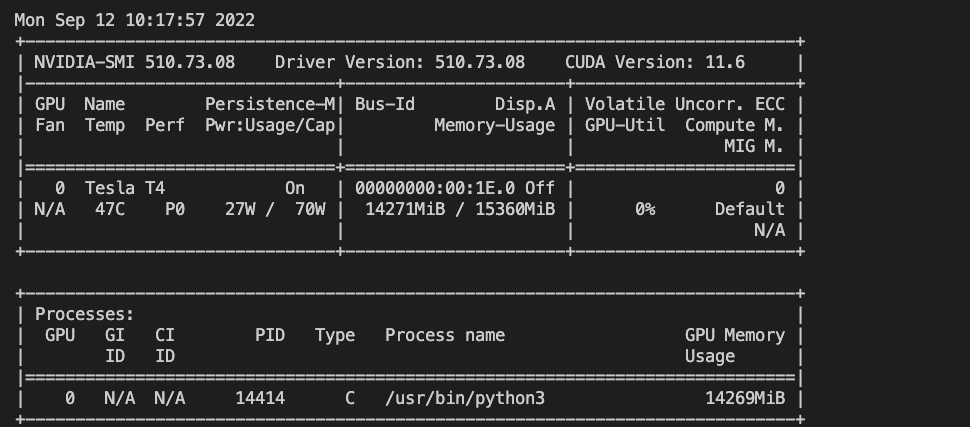

Hi. I'm trying to run the inpainting process with refinement on a regular 1024x1024 image. I've noticed that memory consumption is very high during refinement. It grows a bit more after each forward_rear / forward_front pass and isn't freed until the end of the process. Is this normal? Is there any way to mitigate it?

+1

+1

@ankuPRK

Yes, the refinement process is time- and memory-intensive. It occupies around 24GB of VRAM until all the iterations are completed. That's because we aren't just doing inference but running multiple forward-backward passes. I'll look into whether there's a memory leak later in the day, but it does need around 24GB of VRAM to run effectively.
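To tell expected growth apart from a leak, one option is to log GPU memory after each refinement iteration. The following is a minimal sketch, not code from this repo: `cuda_memory_mib` and `step_deltas` are hypothetical helper names, and it assumes PyTorch is the framework in use. It falls back to `None` when PyTorch or CUDA is unavailable.

```python
try:
    import torch
except ImportError:  # lets the helpers load on machines without PyTorch
    torch = None


def cuda_memory_mib(device=0):
    """Return (allocated, reserved, peak_allocated) in MiB, or None without CUDA."""
    if torch is None or not torch.cuda.is_available():
        return None
    mib = 1024 ** 2
    return (
        torch.cuda.memory_allocated(device) / mib,
        torch.cuda.memory_reserved(device) / mib,
        torch.cuda.max_memory_allocated(device) / mib,
    )


def step_deltas(readings):
    """Differences between consecutive readings (hypothetical helper)."""
    return [b - a for a, b in zip(readings, readings[1:])]
```

Calling `cuda_memory_mib()` once per iteration and passing the allocated values to `step_deltas` gives a rough picture: deltas that stay positive across every iteration may hint at tensors being retained, while a plateau after the first few passes is more consistent with normal forward-backward memory use.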

@ankuPRK, can you please recommend an AWS EC2 instance if we only need to run predictions with the refine=True parameter?

Sure, any instance with total GPU VRAM >= 24GB, i.e. GPU Mem (GiB) >= 24, should work:

https://aws.amazon.com/ec2/instance-types/

Some that should work: p3.8xlarge, p2.8xlarge, g5.xlarge, etc.
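The total-VRAM rule above can be sketched as a quick check. The GPU counts and per-GPU memory below are recalled from the AWS instance-type tables and are assumptions to verify against the linked page; `INSTANCES`, `total_vram_gib`, and `suitable` are hypothetical names used only for illustration.

```python
REQUIRED_GIB = 24  # requirement quoted in this thread

# instance name -> (GPU count, GiB per GPU); assumed values, verify on the AWS page
INSTANCES = {
    "p3.8xlarge": (4, 16),
    "p2.8xlarge": (8, 12),
    "g5.xlarge": (1, 24),
}


def total_vram_gib(name):
    """Total GPU memory across all GPUs of the given instance."""
    count, per_gpu = INSTANCES[name]
    return count * per_gpu


def suitable(required=REQUIRED_GIB):
    """Names of instances whose total GPU memory meets the requirement."""
    return sorted(n for n in INSTANCES if total_vram_gib(n) >= required)


if __name__ == "__main__":
    for name in suitable():
        print(name, total_vram_gib(name), "GiB")
```

Under these assumed figures, the multi-GPU instances qualify only through their combined memory, while g5.xlarge qualifies with a single GPU.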

Thanks @ankuPRK. A few questions, please:

- Is VRAM the same as GPU RAM?

- p3.8xlarge and p2.8xlarge have multiple GPUs. I think we only need a single GPU, so why multiple?

- Do these GPUs support CUDA? Thanks

Hi @hamzanaeem1999,

- Yes, the VRAM is GPU RAM / GPU Memory

- The refinement step uses multiple GPUs to reach a total memory of >= 24GB. If you can get an instance with a single GPU that has >= 24GB of memory, that would also work.

- I think they do have CUDA support, but I'm not super sure. You can start by following the installation steps given in this repo.
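Once the environment is installed, CUDA support and available GPU memory can be checked directly on the instance. This is a minimal sketch assuming PyTorch is installed; `describe_gpus` is a hypothetical helper name, not something from this repo.

```python
try:
    import torch
except ImportError:  # PyTorch not installed yet
    torch = None


def describe_gpus():
    """Return [(name, total_memory_gib), ...] for each CUDA device PyTorch can see."""
    if torch is None or not torch.cuda.is_available():
        return []
    gib = 1024 ** 3
    return [
        (
            torch.cuda.get_device_properties(i).name,
            torch.cuda.get_device_properties(i).total_memory / gib,
        )
        for i in range(torch.cuda.device_count())
    ]


if __name__ == "__main__":
    gpus = describe_gpus()
    if not gpus:
        print("No CUDA device visible to PyTorch")
    for name, mem in gpus:
        print(f"{name}: {mem:.1f} GiB")
    print(f"Total: {sum(mem for _, mem in gpus):.1f} GiB")
```

An empty result means PyTorch cannot see a CUDA device, which usually points to a driver or installation problem rather than to the refinement code itself.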

Thanks @ankuPRK