ChatGLM2-6B


Full-parameter fine-tuning: [launch.py:315:sigkill_handler] Killing subprocess

Is there an existing issue for this?

- [X] I have searched the existing issues

Current Behavior

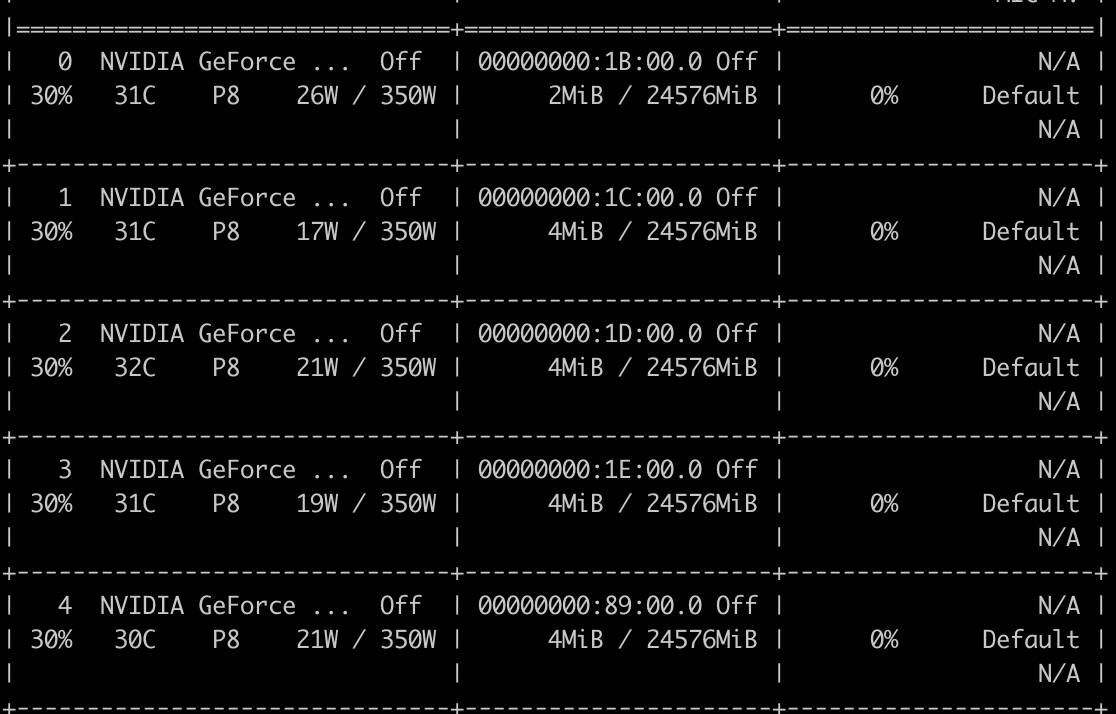

7 GPUs with 24 GB each
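For a sense of scale, here is a back-of-envelope estimate of the memory needed for full-parameter fine-tuning on 24 GB cards. This is a sketch under stated assumptions, not a measurement. It assumes roughly 6.2e9 parameters for ChatGLM2-6B and the usual mixed-precision Adam accounting of 16 bytes per parameter, and it ignores activations. It compares plain data parallelism, where every GPU holds a full copy, with ZeRO stage 3 style partitioning, where states are split across GPUs. The helper names `model_state_gib` and `per_gpu_gib` are hypothetical.

```python
# Back-of-envelope model-state memory for full-parameter fine-tuning.
# Assumptions (not stated in this issue):
#   * ~6.2e9 parameters for ChatGLM2-6B (approximate)
#   * mixed-precision Adam: 2 B fp16 weights + 2 B fp16 grads
#     + 4 B fp32 master weights + 4 B + 4 B fp32 Adam moments = 16 B/param
#   * activations, CUDA context and fragmentation are ignored
NUM_PARAMS = 6.2e9
BYTES_PER_PARAM = 16
GIB = 1024 ** 3


def model_state_gib(num_params: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Weights + gradients + optimizer states, in GiB."""
    return num_params * bytes_per_param / GIB


def per_gpu_gib(num_params: float, num_gpus: int, partitioned: bool) -> float:
    """Model-state memory on each GPU.

    partitioned=False: plain data parallelism, every GPU keeps a full copy.
    partitioned=True:  ZeRO stage 3 style sharding, states split evenly.
    """
    if num_gpus < 1:
        raise ValueError("num_gpus must be >= 1")
    total = model_state_gib(num_params)
    return total / num_gpus if partitioned else total


if __name__ == "__main__":
    for n in (4, 7):
        full = per_gpu_gib(NUM_PARAMS, n, partitioned=False)
        shard = per_gpu_gib(NUM_PARAMS, n, partitioned=True)
        print(f"{n} GPUs: replicated {full:.1f} GiB/GPU, partitioned {shard:.1f} GiB/GPU")
```

Under these assumptions, the replicated case needs about 92 GiB of model states on every card, far beyond 24 GB. Partitioning across 7 cards brings this down to roughly 13 GiB each, before activations are counted.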

Expected Behavior

No response

Steps To Reproduce

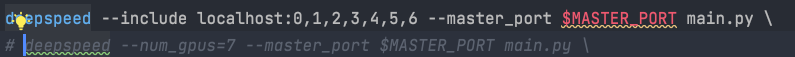

Launch script:

Environment

- OS:Ubuntu 18.04.6 LTS

- Python:3.10.9

- Transformers:4.30.2

- PyTorch:2.0.0

- CUDA Support (`python -c "import torch; print(torch.cuda.is_available())"`) : True

Anything else?

No response

Your error is CUDA out of memory. I replaced --fp16 with:

--pre_seq_len 128

--quantization_bit 4

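and then it ran. However, there seems to be no distributed speedup. I specified 4 GPUs, and every card shows exactly the same memory usage, so it looks as if each GPU is repeating the same computation instead of speeding things up.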
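Identical memory usage on every card is what plain data parallelism normally looks like. Each rank keeps a full model replica, so the per-GPU footprint matches. Each rank also reads a different slice of the dataset, so the per-epoch work is split rather than repeated. The thread does not show whether this particular run actually used a distributed sampler.

The pure-Python sketch below illustrates the idea. It is modeled on how `torch.utils.data.DistributedSampler` assigns indices with `shuffle=False`, but it is not PyTorch code. The function names `shard_indices` and `steps_per_epoch` are hypothetical.

```python
import math
from typing import List


def shard_indices(dataset_len: int, num_replicas: int, rank: int) -> List[int]:
    """Sample indices one data-parallel rank sees in an epoch (no shuffling).

    Modeled on DistributedSampler with shuffle=False and drop_last=False:
    pad the index list by wrapping around so it divides evenly, then take
    a strided slice per rank.
    """
    if dataset_len < 1 or not 0 <= rank < num_replicas:
        raise ValueError("need dataset_len >= 1 and 0 <= rank < num_replicas")
    num_samples = math.ceil(dataset_len / num_replicas)
    total_size = num_samples * num_replicas
    indices = list(range(dataset_len))
    # Pad with wrapped-around indices so every rank gets num_samples items.
    indices += [indices[i % dataset_len] for i in range(total_size - dataset_len)]
    # Rank r takes r, r + num_replicas, r + 2 * num_replicas, ...
    return indices[rank:total_size:num_replicas]


def steps_per_epoch(dataset_len: int, num_replicas: int, per_gpu_batch: int) -> int:
    """Optimizer steps per epoch when each rank processes only its own shard."""
    per_rank = math.ceil(dataset_len / num_replicas)
    return math.ceil(per_rank / per_gpu_batch)


if __name__ == "__main__":
    for r in range(4):
        print(f"rank {r}: {shard_indices(10, 4, r)}")
    # Same per-GPU batch size, more GPUs -> fewer steps per epoch.
    print(steps_per_epoch(1000, 1, 4), steps_per_epoch(1000, 4, 4))
```

If the ranks really do get disjoint shards, the speedup appears as fewer steps per epoch at a fixed per-GPU batch size, not as lower memory per card.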

Hi, has this issue been resolved?

Not solved yet. @xlhuang132

How did you set up full-parameter fine-tuning? Any help would be appreciated.