ChatGLM-6B

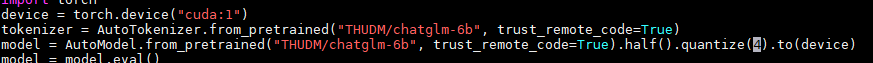

Why can't I choose which GPU to use after quantization? It still runs on GPU 0.

Is your feature request related to a problem? Please describe.

Solutions

1

Additional context

No response

Try this: add these lines at the top of your code.

import os
os.environ['CUDA_VISIBLE_DEVICES'] = '1'
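A slightly fuller sketch of the same idea, in case it helps. The variable is read when CUDA is initialised, so it is safest to set it before `torch` is imported. The helper name `select_gpus` is made up for illustration, not part of ChatGLM-6B or PyTorch. Also note that the visible GPUs are renumbered from 0 inside the process, so physical GPU 1 shows up as `cuda:0` in your code.

```python
import os
import sys
import warnings


def select_gpus(*indices: int) -> str:
    """Restrict this process to the given physical GPU indices.

    Hypothetical helper: it only sets CUDA_VISIBLE_DEVICES, which CUDA
    reads once when it is initialised in this process.
    """
    if "torch" in sys.modules:
        # torch is already imported; if CUDA has been initialised too,
        # changing the variable now will have no effect.
        warnings.warn("torch already imported; set the GPUs earlier")
    value = ",".join(str(i) for i in indices)
    os.environ["CUDA_VISIBLE_DEVICES"] = value
    return value


# Call this first, before importing torch or loading the model.
select_gpus(1)
# From here on, physical GPU 1 is the only device the process can see,
# and it is addressed as device 0 ("cuda:0") inside the process.
```

Because of the renumbering, code that reports "device 0" after this change may well be running on the physical GPU you picked; `nvidia-smi` shows the physical index.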

Is there any way to run the model on 2 GPUs?

import os
os.environ['CUDA_VISIBLE_DEVICES'] = '1,2'

@zxcvbn114514

It's not working. The model simply runs on the second GPU after I add the code.
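That behaviour is expected as far as I can tell: `CUDA_VISIBLE_DEVICES = '1,2'` only makes both GPUs visible to the process. It does not split the model, so a model moved with `.cuda()` lands entirely on the first visible device. To actually use two GPUs, the layers have to be placed on different devices, for example through a device map. Below is a minimal sketch of how such a layer-to-GPU assignment could be computed. The function name `split_layers` and the layer count of 28 are assumptions for illustration; passing the result to a real loader needs that loader's own module names.

```python
from typing import Dict


def split_layers(num_layers: int, num_gpus: int) -> Dict[int, int]:
    """Assign contiguous runs of layer indices to GPUs as evenly as possible.

    Hypothetical helper: returns {layer_index: gpu_index}. GPU indices are
    relative to the visible set, so with CUDA_VISIBLE_DEVICES='1,2'
    gpu_index 0 means physical GPU 1.
    """
    if num_layers <= 0 or num_gpus <= 0:
        raise ValueError("num_layers and num_gpus must be positive")
    base, extra = divmod(num_layers, num_gpus)
    mapping: Dict[int, int] = {}
    layer = 0
    for gpu in range(num_gpus):
        # The first `extra` GPUs take one additional layer each.
        count = base + (1 if gpu < extra else 0)
        for _ in range(count):
            mapping[layer] = gpu
            layer += 1
    return mapping


# Assumed layer count, for illustration only.
layer_to_gpu = split_layers(num_layers=28, num_gpus=2)
```

With these inputs the first half of the layers goes to visible device 0 and the second half to visible device 1. Embeddings and the output head would also need a device, which this sketch leaves out.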