ChatGLM-6B

[BUG/Help] Quantization failed?

Is there an existing issue for this?

- [X] I have searched the existing issues

Current Behavior

The web page opens normally at startup, but quantizing the model seems to have failed:

C:\Users\oo\langchain-ChatGLM>python webui.py

Explicitly passing a revision is encouraged when loading a model with custom code to ensure no malicious code has been contributed in a newer revision.

Explicitly passing a revision is encouraged when loading a configuration with custom code to ensure no malicious code has been contributed in a newer revision.

Explicitly passing a revision is encouraged when loading a model with custom code to ensure no malicious code has been contributed in a newer revision.

No compiled kernel found.

Compiling kernels : C:\Users\oo\.cache\huggingface\modules\transformers_modules\chatglm-6b-int4\quantization_kernels_parallel.c

Compiling gcc -O3 -fPIC -pthread -fopenmp -std=c99 C:\Users\oo\.cache\huggingface\modules\transformers_modules\chatglm-6b-int4\quantization_kernels_parallel.c -shared -o C:\Users\oo\.cache\huggingface\modules\transformers_modules\chatglm-6b-int4\quantization_kernels_parallel.so

c:/mingw/bin/../lib/gcc/mingw32/6.3.0/../../../../mingw32/bin/ld.exe: cannot find -lpthread

collect2.exe: error: ld returned 1 exit status

Compile failed, using default cpu kernel code.

Compiling gcc -O3 -fPIC -std=c99 C:\Users\oo\.cache\huggingface\modules\transformers_modules\chatglm-6b-int4\quantization_kernels.c -shared -o C:\Users\oo\.cache\huggingface\modules\transformers_modules\chatglm-6b-int4\quantization_kernels.so

Kernels compiled : C:\Users\oo\.cache\huggingface\modules\transformers_modules\chatglm-6b-int4\quantization_kernels.so

Cannot load cpu kernel, don't use quantized model on cpu.

Using quantization cache

Applying quantization to glm layers

No sentence-transformers model found with name M:\model\text2vec-large-chinese. Creating a new one with MEAN pooling.

No sentence-transformers model found with name M:\model\text2vec-large-chinese. Creating a new one with MEAN pooling.

Expected Behavior

No response

Steps To Reproduce

gcc fails to compile quantization_kernels_parallel (the linker reports `cannot find -lpthread`).
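The log suggests the loader first tries the parallel kernel with `-pthread -fopenmp` and, when linking fails, falls back to the single-threaded kernel. The sketch below rebuilds the two gcc commands exactly as they appear in the log and runs them with `subprocess`, so the linker error can be reproduced outside `webui.py`. It is a reconstruction from the log, not ChatGLM's actual loader code; the helper names `build_gcc_command` and `compile_kernel` are hypothetical.

```python
import shutil
import subprocess
from pathlib import Path

# Flags copied from the log. The parallel kernel also needs POSIX threads
# and OpenMP, which is where the failing `-lpthread` link step comes from.
BASE_FLAGS = ["-O3", "-fPIC", "-std=c99"]
PARALLEL_FLAGS = ["-O3", "-fPIC", "-pthread", "-fopenmp", "-std=c99"]


def build_gcc_command(source: Path, parallel: bool) -> list[str]:
    """Return the gcc command line for one kernel (hypothetical helper)."""
    flags = PARALLEL_FLAGS if parallel else BASE_FLAGS
    output = source.with_suffix(".so")
    return ["gcc", *flags, str(source), "-shared", "-o", str(output)]


def compile_kernel(source: Path, parallel: bool) -> tuple[bool, str]:
    """Run gcc and return (success, combined stdout and stderr)."""
    if shutil.which("gcc") is None:
        return False, "gcc not found on PATH"
    result = subprocess.run(
        build_gcc_command(source, parallel), capture_output=True, text=True
    )
    return result.returncode == 0, result.stdout + result.stderr


if __name__ == "__main__":
    # Cache directory as shown in the log; adjust if yours differs.
    cache = Path.home() / ".cache/huggingface/modules/transformers_modules/chatglm-6b-int4"
    # Try the parallel kernel first, then fall back, as the log shows.
    for name, parallel in [
        ("quantization_kernels_parallel.c", True),
        ("quantization_kernels.c", False),
    ]:
        ok, output = compile_kernel(cache / name, parallel)
        print(name, "OK" if ok else "FAILED")
        print(output)
        if ok:
            break
```

Running it prints gcc's full output for each attempt, which is usually more informative than the two lines the loader echoes.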

Environment

- OS: Windows 10 (64-bit)

- Python: 3.10.9

- Transformers:

- PyTorch:

- CUDA Support (`python -c "import torch; print(torch.cuda.is_available())"`) :
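The Transformers, PyTorch and CUDA fields above were left blank. A small script like the following (a sketch; `collect_env` is a hypothetical helper, not part of ChatGLM) prints them in the same format as the template, and also reports whether the interpreter is 32- or 64-bit, which matters when loading natively compiled kernels.

```python
import platform
import struct
from importlib import metadata


def package_version(name: str) -> str:
    """Return the installed version of a package, or a placeholder."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return "not installed"


def collect_env() -> dict[str, str]:
    """Gather the fields requested by the issue template."""
    info = {
        "OS": platform.platform(),
        # Pointer size in bits tells whether this Python build is 32- or 64-bit.
        "Python": f"{platform.python_version()} ({struct.calcsize('P') * 8}-bit)",
        "Transformers": package_version("transformers"),
        "PyTorch": package_version("torch"),
    }
    try:
        import torch

        info["CUDA Support"] = str(torch.cuda.is_available())
    except (ImportError, OSError):
        info["CUDA Support"] = "unknown (torch not importable)"
    return info


if __name__ == "__main__":
    for key, value in collect_env().items():
        print(f"- {key}: {value}")
```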

Anything else?

No response

Try running `ctypes.cdll.LoadLibrary(r"C:\Users\oo\.cache\huggingface\modules\transformers_modules\chatglm-6b-int4\quantization_kernels.so")` manually. What error does it report?
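When trying this, pass the path as a raw string (`r"..."`) or use forward slashes: in an ordinary string literal, the `\U` in `C:\Users` starts a Unicode escape and Python raises a `SyntaxError` before `ctypes` is ever called. The sketch below wraps the load in a hypothetical `try_load` helper that returns the error text instead of crashing. It also prints the interpreter's bitness, on the assumption (not confirmed in this thread) that the `mingw32` toolchain in the log produces 32-bit binaries, which a 64-bit Python cannot load.

```python
import ctypes
import struct
from pathlib import Path
from typing import Optional

# Path taken from the log; the raw string keeps "\U" from being parsed as an escape.
KERNEL = Path(
    r"C:\Users\oo\.cache\huggingface\modules\transformers_modules"
    r"\chatglm-6b-int4\quantization_kernels.so"
)


def try_load(path: Path) -> Optional[str]:
    """Load a shared library; return None on success or the error text."""
    try:
        ctypes.cdll.LoadLibrary(str(path))
    except OSError as exc:
        return f"{type(exc).__name__}: {exc}"
    return None


if __name__ == "__main__":
    # Assumption to check: a 64-bit Python cannot load a 32-bit DLL.
    print(f"Python is {struct.calcsize('P') * 8}-bit")
    error = try_load(KERNEL)
    print("loaded OK" if error is None else error)
```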

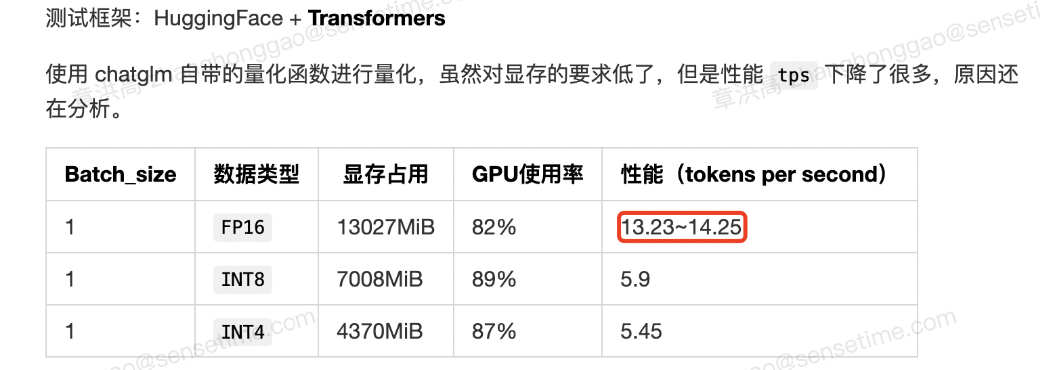

After quantization my model actually runs slower. Why is that?
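To compare the quantized and unquantized models fairly, it can help to time the same prompt several times and compare medians, since the first call often carries one-off warm-up cost. Because the log above warns `Cannot load cpu kernel, don't use quantized model on cpu.`, it is also worth noting whether each run was on CPU or GPU. The helper below (`median_latency`, hypothetical) is a minimal sketch of such a measurement.

```python
import statistics
import time
from typing import Callable


def median_latency(fn: Callable[[], object], repeats: int = 5, warmup: int = 1) -> float:
    """Call fn `warmup` times untimed, then `repeats` times timed; return median seconds."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    for _ in range(warmup):
        fn()
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


if __name__ == "__main__":
    # Stand-in workload; swap in the real model call for each model variant.
    print(f"{median_latency(lambda: sum(range(100_000))):.4f} s")
```

Assuming the `model.chat` interface shown in the ChatGLM-6B README, the workload could be `lambda: model.chat(tokenizer, "Hello", history=[])`, measured once with the full-precision model and once with the quantized one.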