FastDeploy

OCR FastDeploy GPU server deployment error: failed to load all models

Friendly reminder: according to incomplete community statistics, asking questions using the template speeds up replies and resolution.

Environment

- [FastDeploy version]: docker image: registry.baidubce.com/paddlepaddle/fastdeploy:1.0.4-gpu-cuda11.4-trt8.5-21.10

- [System platform]: Linux x64 (Ubuntu 18.04)

- [Hardware]: Nvidia GPU, CUDA 12.0. Deployment follows https://github.com/PaddlePaddle/FastDeploy/tree/develop/examples/vision/ocr/PP-OCR/serving/fastdeploy_serving

Problem log and steps to reproduce

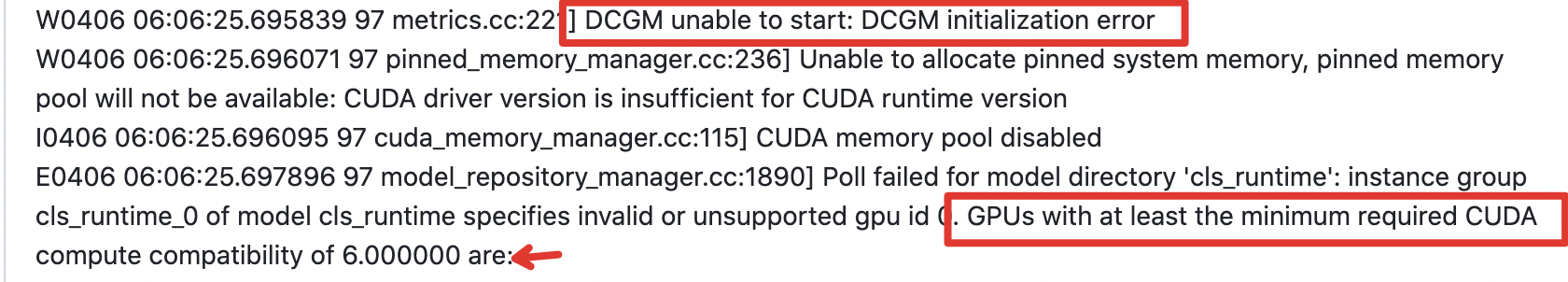

Starting the service with `fastdeployserver --model-repository=/ocr_serving/models` fails. The log is as follows:

Error: Failed to initialize NVML
W0406 06:06:25.695839 97 metrics.cc:221] DCGM unable to start: DCGM initialization error
W0406 06:06:25.696071 97 pinned_memory_manager.cc:236] Unable to allocate pinned system memory, pinned memory pool will not be available: CUDA driver version is insufficient for CUDA runtime version
I0406 06:06:25.696095 97 cuda_memory_manager.cc:115] CUDA memory pool disabled
E0406 06:06:25.697896 97 model_repository_manager.cc:1890] Poll failed for model directory 'cls_runtime': instance group cls_runtime_0 of model cls_runtime specifies invalid or unsupported gpu id 0. GPUs with at least the minimum required CUDA compute compatibility of 6.000000 are:
E0406 06:06:25.699168 97 model_repository_manager.cc:1890] Poll failed for model directory 'det_runtime': instance group det_runtime_0 of model det_runtime specifies invalid or unsupported gpu id 0. GPUs with at least the minimum required CUDA compute compatibility of 6.000000 are:
E0406 06:06:25.700564 97 model_repository_manager.cc:1890] Poll failed for model directory 'rec_runtime': instance group rec_runtime_0 of model rec_runtime specifies invalid or unsupported gpu id 0. GPUs with at least the minimum required CUDA compute compatibility of 6.000000 are:
E0406 06:06:25.700604 97 model_repository_manager.cc:1375] Invalid argument: ensemble rec_pp contains models that are not available: rec_runtime
E0406 06:06:25.700613 97 model_repository_manager.cc:1375] Invalid argument: ensemble pp_ocr contains models that are not available: det_runtime
E0406 06:06:25.700619 97 model_repository_manager.cc:1375] Invalid argument: ensemble cls_pp contains models that are not available: cls_runtime
I0406 06:06:25.700740 97 model_repository_manager.cc:1022] loading: cls_postprocess:1
I0406 06:06:25.802928 97 model_repository_manager.cc:1022] loading: det_postprocess:1
I0406 06:06:25.812457 97 python.cc:1875] TRITONBACKEND_ModelInstanceInitialize: cls_postprocess_0 (CPU device 0)
I0406 06:06:25.903565 97 model_repository_manager.cc:1022] loading: det_preprocess:1
I0406 06:06:26.004031 97 model_repository_manager.cc:1022] loading: rec_postprocess:1
model_config: {'name': 'cls_postprocess', 'platform': '', 'backend': 'python', 'version_policy': {'latest': {'num_versions': 1}}, 'max_batch_size': 128, 'input': [{'name': 'POST_INPUT_0', 'data_type': 'TYPE_FP32', 'format': 'FORMAT_NONE', 'dims': [2], 'is_shape_tensor': False, 'allow_ragged_batch': False}], 'output': [{'name': 'POST_OUTPUT_0', 'data_type': 'TYPE_INT32', 'dims': [1], 'label_filename': '', 'is_shape_tensor': False}, {'name': 'POST_OUTPUT_1', 'data_type': 'TYPE_FP32', 'dims': [1], 'label_filename': '', 'is_shape_tensor': False}], 'batch_input': [], 'batch_output': [], 'optimization': {'priority': 'PRIORITY_DEFAULT', 'input_pinned_memory': {'enable': True}, 'output_pinned_memory': {'enable': True}, 'gather_kernel_buffer_threshold': 0, 'eager_batching': False}, 'instance_group': [{'name': 'cls_postprocess_0', 'kind': 'KIND_CPU', 'count': 1, 'gpus': [], 'secondary_devices': [], 'profile': [], 'passive': False, 'host_policy': ''}], 'default_model_filename': '', 'cc_model_filenames': {}, 'metric_tags': {}, 'parameters': {}, 'model_warmup': []}
postprocess input names: ['POST_INPUT_0']
postprocess output names: ['POST_OUTPUT_0', 'POST_OUTPUT_1']
I0406 06:06:26.082822 97 model_repository_manager.cc:1183] successfully loaded 'cls_postprocess' version 1
I0406 06:06:26.083348 97 python.cc:1875] TRITONBACKEND_ModelInstanceInitialize: det_postprocess_0 (CPU device 0)
model_config: {'name': 'det_postprocess', 'platform': '', 'backend': 'python', 'version_policy': {'latest': {'num_versions': 1}}, 'max_batch_size': 128, 'input': [{'name': 'POST_INPUT_0', 'data_type': 'TYPE_FP32', 'format': 'FORMAT_NONE', 'dims': [1, -1, -1], 'is_shape_tensor': False, 'allow_ragged_batch': False}, {'name': 'POST_INPUT_1', 'data_type': 'TYPE_INT32', 'format': 'FORMAT_NONE', 'dims': [4], 'is_shape_tensor': False, 'allow_ragged_batch': False}, {'name': 'ORI_IMG', 'data_type': 'TYPE_UINT8', 'format': 'FORMAT_NONE', 'dims': [-1, -1, 3], 'is_shape_tensor': False, 'allow_ragged_batch': False}], 'output': [{'name': 'POST_OUTPUT_0', 'data_type': 'TYPE_STRING', 'dims': [-1, 1], 'label_filename': '', 'is_shape_tensor': False}, {'name': 'POST_OUTPUT_1', 'data_type': 'TYPE_FP32', 'dims': [-1, 1], 'label_filename': '', 'is_shape_tensor': False}, {'name': 'POST_OUTPUT_2', 'data_type': 'TYPE_FP32', 'dims': [-1, -1, 1], 'label_filename': '', 'is_shape_tensor': False}], 'batch_input': [], 'batch_output': [], 'optimization': {'priority': 'PRIORITY_DEFAULT', 'input_pinned_memory': {'enable': True}, 'output_pinned_memory': {'enable': True}, 'gather_kernel_buffer_threshold': 0, 'eager_batching': False}, 'instance_group': [{'name': 'det_postprocess_0', 'kind': 'KIND_CPU', 'count': 1, 'gpus': [], 'secondary_devices': [], 'profile': [], 'passive': False, 'host_policy': ''}], 'default_model_filename': '', 'cc_model_filenames': {}, 'metric_tags': {}, 'parameters': {}, 'model_warmup': []}
postprocess input names: ['POST_INPUT_0', 'POST_INPUT_1', 'ORI_IMG']
postprocess output names: ['POST_OUTPUT_0', 'POST_OUTPUT_1', 'POST_OUTPUT_2']
I0406 06:06:26.329773 97 python.cc:1875] TRITONBACKEND_ModelInstanceInitialize: det_preprocess_0 (CPU device 0)
I0406 06:06:26.329891 97 model_repository_manager.cc:1183] successfully loaded 'det_postprocess' version 1
model_config: {'name': 'det_preprocess', 'platform': '', 'backend': 'python', 'version_policy': {'latest': {'num_versions': 1}}, 'max_batch_size': 1, 'input': [{'name': 'INPUT_0', 'data_type': 'TYPE_UINT8', 'format': 'FORMAT_NONE', 'dims': [-1, -1, 3], 'is_shape_tensor': False, 'allow_ragged_batch': False}], 'output': [{'name': 'OUTPUT_0', 'data_type': 'TYPE_FP32', 'dims': [3, -1, -1], 'label_filename': '', 'is_shape_tensor': False}, {'name': 'OUTPUT_1', 'data_type': 'TYPE_INT32', 'dims': [4], 'label_filename': '', 'is_shape_tensor': False}], 'batch_input': [], 'batch_output': [], 'optimization': {'priority': 'PRIORITY_DEFAULT', 'input_pinned_memory': {'enable': True}, 'output_pinned_memory': {'enable': True}, 'gather_kernel_buffer_threshold': 0, 'eager_batching': False}, 'instance_group': [{'name': 'det_preprocess_0', 'kind': 'KIND_CPU', 'count': 1, 'gpus': [], 'secondary_devices': [], 'profile': [], 'passive': False, 'host_policy': ''}], 'default_model_filename': '', 'cc_model_filenames': {}, 'metric_tags': {}, 'parameters': {}, 'model_warmup': []}
preprocess input names: ['INPUT_0']
preprocess output names: ['OUTPUT_0', 'OUTPUT_1']
I0406 06:06:26.601789 97 model_repository_manager.cc:1183] successfully loaded 'det_preprocess' version 1
I0406 06:06:26.602037 97 python.cc:1875] TRITONBACKEND_ModelInstanceInitialize: rec_postprocess_0 (CPU device 0)
model_config: {'name': 'rec_postprocess', 'platform': '', 'backend': 'python', 'version_policy': {'latest': {'num_versions': 1}}, 'max_batch_size': 128, 'input': [{'name': 'POST_INPUT_0', 'data_type': 'TYPE_FP32', 'format': 'FORMAT_NONE', 'dims': [-1, 6625], 'is_shape_tensor': False, 'allow_ragged_batch': False}], 'output': [{'name': 'POST_OUTPUT_0', 'data_type': 'TYPE_STRING', 'dims': [1], 'label_filename': '', 'is_shape_tensor': False}, {'name': 'POST_OUTPUT_1', 'data_type': 'TYPE_FP32', 'dims': [1], 'label_filename': '', 'is_shape_tensor': False}], 'batch_input': [], 'batch_output': [], 'optimization': {'priority': 'PRIORITY_DEFAULT', 'input_pinned_memory': {'enable': True}, 'output_pinned_memory': {'enable': True}, 'gather_kernel_buffer_threshold': 0, 'eager_batching': False}, 'instance_group': [{'name': 'rec_postprocess_0', 'kind': 'KIND_CPU', 'count': 1, 'gpus': [], 'secondary_devices': [], 'profile': [], 'passive': False, 'host_policy': ''}], 'default_model_filename': '', 'cc_model_filenames': {}, 'metric_tags': {}, 'parameters': {}, 'model_warmup': []}
postprocess input names: ['POST_INPUT_0']
postprocess output names: ['POST_OUTPUT_0', 'POST_OUTPUT_1']
I0406 06:06:26.882847 97 model_repository_manager.cc:1183] successfully loaded 'rec_postprocess' version 1
I0406 06:06:26.882963 97 server.cc:522]
+------------------+------+
| Repository Agent | Path |
+------------------+------+
+------------------+------+

I0406 06:06:26.883003 97 server.cc:549]
+---------+-------------------------------------------------------+--------+
| Backend | Path                                                  | Config |
+---------+-------------------------------------------------------+--------+
| python  | /opt/tritonserver/backends/python/libtriton_python.so | {}     |
+---------+-------------------------------------------------------+--------+

I0406 06:06:26.883037 97 server.cc:592]
+-----------------+---------+--------+
| Model           | Version | Status |
+-----------------+---------+--------+
| cls_postprocess | 1       | READY  |
| det_postprocess | 1       | READY  |
| det_preprocess  | 1       | READY  |
| rec_postprocess | 1       | READY  |
+-----------------+---------+--------+

I0406 06:06:26.883137 97 tritonserver.cc:1920]
+----------------------------------+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
| Option                           | Value                                                                                                                                                                                  |
+----------------------------------+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
| server_id                        | triton                                                                                                                                                                                 |
| server_version                   | 2.15.0                                                                                                                                                                                 |
| server_extensions                | classification sequence model_repository model_repository(unload_dependents) schedule_policy model_configuration system_shared_memory cuda_shared_memory binary_tensor_data statistics |
| model_repository_path[0]         | /ocr_serving/models                                                                                                                                                                    |
| model_control_mode               | MODE_NONE                                                                                                                                                                              |
| strict_model_config              | 1                                                                                                                                                                                      |
| rate_limit                       | OFF                                                                                                                                                                                    |
| pinned_memory_pool_byte_size     | 268435456                                                                                                                                                                              |
| response_cache_byte_size         | 0                                                                                                                                                                                      |
| min_supported_compute_capability | 6.0                                                                                                                                                                                    |
| strict_readiness                 | 1                                                                                                                                                                                      |
| exit_timeout                     | 30                                                                                                                                                                                     |
+----------------------------------+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

I0406 06:06:26.883193 97 server.cc:252] Waiting for in-flight requests to complete.
I0406 06:06:26.883202 97 model_repository_manager.cc:1055] unloading: rec_postprocess:1
I0406 06:06:26.883252 97 model_repository_manager.cc:1055] unloading: det_preprocess:1
I0406 06:06:26.883298 97 model_repository_manager.cc:1055] unloading: det_postprocess:1
I0406 06:06:26.883367 97 model_repository_manager.cc:1055] unloading: cls_postprocess:1
I0406 06:06:26.883393 97 server.cc:267] Timeout 30: Found 4 live models and 0 in-flight non-inference requests
I0406 06:06:27.883475 97 server.cc:267] Timeout 29: Found 4 live models and 0 in-flight non-inference requests
Cleaning up...
Cleaning up...
Cleaning up...
Cleaning up...
I0406 06:06:27.944011 97 model_repository_manager.cc:1166] successfully unloaded 'det_postprocess' version 1
I0406 06:06:27.945687 97 model_repository_manager.cc:1166] successfully unloaded 'cls_postprocess' version 1
I0406 06:06:27.945913 97 model_repository_manager.cc:1166] successfully unloaded 'rec_postprocess' version 1
I0406 06:06:27.946568 97 model_repository_manager.cc:1166] successfully unloaded 'det_preprocess' version 1
I0406 06:06:28.883892 97 server.cc:267] Timeout 28: Found 0 live models and 0 in-flight non-inference requests
error: creating server: Internal - failed to load all models
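In a log this long, the lines that matter appear to be the `W`/`E` entries emitted before any model loads: NVML and DCGM fail to start, the CUDA driver is reported as insufficient for the runtime, and the list of GPUs with compute capability of at least 6.0 is empty, so every `*_runtime` model and the ensembles that depend on them fail. A minimal Python sketch, assuming only the glog-style prefix visible in this log (`<level><MMDD> <time> <thread> <file:line>] `), that splits a flattened log back into entries and keeps just the error messages:

```python
import re
from typing import List, Tuple

# glog-style prefix as seen in the log: <level><MMDD> <HH:MM:SS.micro> <thread> <file:line>]
HEADER = re.compile(r"([IWEF])(\d{4}) (\d{2}:\d{2}:\d{2}\.\d+) +(\d+) ([\w.]+:\d+)\] ")


def split_entries(log: str) -> List[Tuple[str, str, str]]:
    """Split a (possibly flattened) log into (level, source, message) tuples."""
    matches = list(HEADER.finditer(log))
    entries = []
    for i, m in enumerate(matches):
        # a message runs until the next prefix, or to the end of the text
        end = matches[i + 1].start() if i + 1 < len(matches) else len(log)
        entries.append((m.group(1), m.group(5), log[m.end():end].strip()))
    return entries


def error_messages(log: str) -> List[str]:
    """Keep only E-level messages, which usually carry the root cause."""
    return [msg for level, _, msg in split_entries(log) if level == "E"]


if __name__ == "__main__":
    sample = (
        "W0406 06:06:25.696071 97 pinned_memory_manager.cc:236] Unable to allocate pinned "
        "system memory: CUDA driver version is insufficient for CUDA runtime version "
        "E0406 06:06:25.697896 97 model_repository_manager.cc:1890] Poll failed for model "
        "directory 'cls_runtime': instance group cls_runtime_0 of model cls_runtime "
        "specifies invalid or unsupported gpu id 0. "
        "I0406 06:06:25.700740 97 model_repository_manager.cc:1022] loading: cls_postprocess:1"
    )
    for msg in error_messages(sample):
        print(msg)
```

Text before the first prefix (such as `Error: Failed to initialize NVML`) and the Python backend's `model_config` dumps are folded into the neighbouring entry or skipped, which is acceptable when the goal is only to surface the `E` lines.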

We have not yet tested on a host with CUDA 12.0. Does `nvidia-smi` run normally inside the container?
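The question above can be answered from inside the container with `nvidia-smi -L`, which prints one `GPU <n>: ...` line per visible device. A minimal Python sketch, assuming only that `nvidia-smi` is on the container's `PATH` when GPUs are exposed; an empty result suggests the container cannot see any GPU, which would be consistent with the empty device list in the log:

```python
import subprocess
from typing import List


def parse_gpu_list(output: str) -> List[str]:
    """Keep the 'GPU <n>: ...' lines from `nvidia-smi -L` output."""
    return [line.strip() for line in output.splitlines() if line.strip().startswith("GPU ")]


def visible_gpus() -> List[str]:
    """Run `nvidia-smi -L`; return [] if the tool is missing, hangs, or fails."""
    try:
        result = subprocess.run(
            ["nvidia-smi", "-L"], capture_output=True, text=True, check=False, timeout=30
        )
    except (OSError, subprocess.TimeoutExpired):
        # a missing nvidia-smi often means the container was started without GPU access
        return []
    if result.returncode != 0:
        return []
    return parse_gpu_list(result.stdout)


if __name__ == "__main__":
    gpus = visible_gpus()
    print("\n".join(gpus) if gpus else "No GPU visible inside this container")
```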

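It looks like the driver version does not match the DCGM version. Alternatively, the card is too low-end to be supported: a GPU with compute capability (SM) 6.0 or higher is required.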
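The compute-capability requirement can be checked directly against the `min_supported_compute_capability` of 6.0 shown in the server options table. A minimal sketch, assuming a driver recent enough to accept the `compute_cap` field in `nvidia-smi --query-gpu` (older drivers reject it); the GPU names in the usage data are illustrative only:

```python
import subprocess
from typing import List, Tuple

# Triton's option table in the log reports min_supported_compute_capability = 6.0
MIN_CC: Tuple[int, int] = (6, 0)

# assumption: the compute_cap query field exists on this driver version
QUERY = ["nvidia-smi", "--query-gpu=index,name,compute_cap", "--format=csv,noheader"]


def parse_query(output: str) -> List[Tuple[str, str, Tuple[int, int]]]:
    """Parse 'index, name, major.minor' rows into (index, name, (major, minor))."""
    gpus = []
    for line in output.strip().splitlines():
        index, rest = line.split(",", 1)
        name, cap = rest.rsplit(",", 1)  # split from the right in case a name has commas
        major, minor = cap.strip().split(".")
        gpus.append((index.strip(), name.strip(), (int(major), int(minor))))
    return gpus


def supported(cap: Tuple[int, int], minimum: Tuple[int, int] = MIN_CC) -> bool:
    """Tuple comparison orders by major, then minor version."""
    return cap >= minimum


if __name__ == "__main__":
    try:
        out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    except (OSError, subprocess.CalledProcessError) as exc:
        raise SystemExit(f"nvidia-smi query failed: {exc}")
    for index, name, cap in parse_query(out):
        status = "OK" if supported(cap) else "below 6.0, likely rejected by Triton"
        print(f"GPU {index} ({name}): compute capability {cap[0]}.{cap[1]} -> {status}")
```

Comparing `(major, minor)` tuples rather than floats avoids surprises such as a hypothetical `x.10` sorting below `x.9`.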

I have the same problem. Has it been solved?

If you run it in a container, pass the `--gpus all` flag to `docker run`.
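A minimal Python sketch of that suggestion, which builds and prints the `docker run` command using the image from the environment section and the `fastdeployserver` command from the log. The host directory and the `/ocr_serving` mount point are assumptions, any port mapping the serving guide uses is omitted, and `--gpus` itself needs Docker 19.03+ with the NVIDIA Container Toolkit installed on the host:

```python
import shlex
from typing import List

IMAGE = "registry.baidubce.com/paddlepaddle/fastdeploy:1.0.4-gpu-cuda11.4-trt8.5-21.10"


def docker_run_cmd(host_dir: str, image: str = IMAGE) -> List[str]:
    """Build a `docker run` argv that exposes all GPUs and starts fastdeployserver."""
    return [
        "docker", "run", "--rm", "-it",
        "--gpus", "all",                   # without this the container typically sees no GPU
        "-v", f"{host_dir}:/ocr_serving",  # hypothetical mount: host dir containing models/
        image,
        "fastdeployserver", "--model-repository=/ocr_serving/models",
    ]


if __name__ == "__main__":
    # hypothetical host path; replace with the directory that holds models/
    print(shlex.join(docker_run_cmd("/path/to/ocr_serving")))
```

Returning an argument list instead of a shell string lets the same value be passed straight to `subprocess.run` without quoting issues.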