azure-search-openai-demo


Support for handling errors caused by exceeding the chat model's maximum token limit.

Please provide us with the following information:

This issue is for a: (mark with an x)

- [ ] bug report -> please search issues before submitting

- [x] feature request

- [ ] documentation issue or request

- [ ] regression (a behavior that used to work and stopped in a new release)

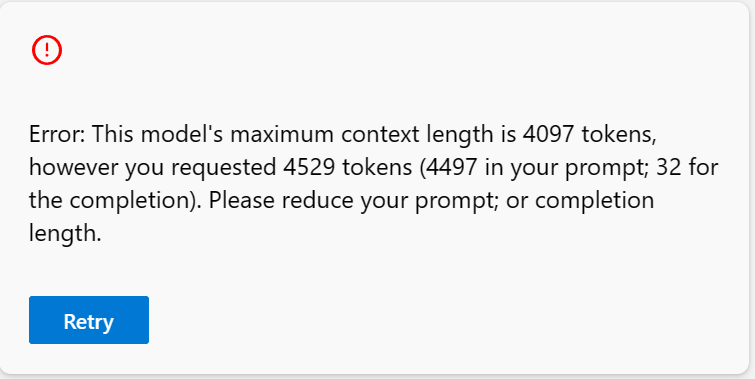

If the conversation is expected to exceed GPT-3.5-turbo's maximum context size of 4097 tokens, it would be better to automatically shorten the earlier parts of the conversation so that the chat can continue within the limit, instead of displaying an error message.
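One simple way to shorten the conversation is to drop the oldest turns until the prompt fits the budget (summarizing them would be another option). The minimal Python sketch below assumes OpenAI-style `{"role": ..., "content": ...}` message dicts. `trim_history`, `approx_token_count`, and `reserve_for_answer` are hypothetical names, not part of this repository. The 4-characters-per-token estimate is only a rough heuristic; a real tokenizer such as `tiktoken` would be more accurate.

```python
from typing import Callable

MAX_CONTEXT_TOKENS = 4097  # gpt-3.5-turbo limit cited in this issue


def approx_token_count(text: str) -> int:
    # Assumption: roughly 4 characters per token for English text.
    # Ignores the few tokens of per-message formatting overhead.
    return max(1, len(text) // 4)


def trim_history(
    system_prompt: str,
    history: list[dict[str, str]],
    max_tokens: int = MAX_CONTEXT_TOKENS,
    reserve_for_answer: int = 1024,
    count_tokens: Callable[[str], int] = approx_token_count,
) -> list[dict[str, str]]:
    """Drop the oldest turns until the prompt fits within max_tokens.

    Hypothetical helper: the newest message is always kept, even if it
    alone exceeds the budget, so the user's latest question is never lost.
    """
    budget = max_tokens - reserve_for_answer - count_tokens(system_prompt)
    kept: list[dict[str, str]] = []
    used = 0
    # Walk from newest to oldest so the most recent context survives.
    for message in reversed(history):
        cost = count_tokens(message["content"])
        if kept and used + cost > budget:
            break  # stop at the first turn that does not fit, keeping history contiguous
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return [{"role": "system", "content": system_prompt}] + kept
```

Stopping at the first turn that does not fit (rather than skipping it and continuing) keeps the retained history contiguous, so the model never sees a reply without the question that prompted it.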

In particular, it would help to keep the conversation shown on screen separate from the conversation data sent to the model, so that earlier messages remain visible on screen even after they have been trimmed from the data sent to the model.
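A minimal sketch of that separation, using a hypothetical `Conversation` class that is not part of this repository: the full transcript stays in `display_history` for rendering, while `model_history` returns a trimmed copy for the model request. A fixed message count is used here for simplicity; a token-based budget could replace it.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    # Full transcript rendered in the UI; never trimmed.
    display_history: list[dict[str, str]] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.display_history.append({"role": role, "content": content})

    def model_history(self, max_messages: int) -> list[dict[str, str]]:
        # Return copies of only the most recent turns to send to the model.
        # display_history itself is left untouched, so the screen keeps
        # showing everything. Guard against [-0:], which would return all.
        if max_messages <= 0:
            return []
        return [dict(m) for m in self.display_history[-max_messages:]]
```

Because `model_history` returns copies, trimming or editing what is sent to the model can never erase or alter what the user sees.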

Minimal steps to reproduce

Any log messages given by the failure

Expected/desired behavior

OS and Version?

Windows 7, 8 or 10. Linux (which distribution). macOS (Yosemite? El Capitan? Sierra?)

Versions

Mention any other details that might be useful

Thanks! We'll be in touch soon.

This issue is stale because it has been open 60 days with no activity. Remove stale label or comment or this issue will be closed.