clickhouse-backup


COS upload parts must be greater than 1 MB

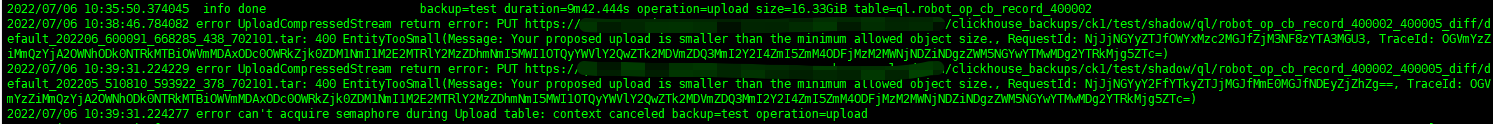

When I used the `create_remote` command to back up my data, I got an error: `400 EntityTooSmall(Message: Your proposed upload is smaller than the minimum allowed object size.)`

Then I checked the Tencent Cloud documentation, which says each upload part must be larger than 1 MB unless it is the last part.
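The rule above can be expressed as a small check over a list of part sizes. This is a minimal Python sketch for illustration only, not code from clickhouse-backup or the COS SDK; it assumes "1 MB" means 1 MiB (1,048,576 bytes), and the helper name `violates_min_part_size` is hypothetical.

```python
# Hypothetical helper illustrating the COS multipart rule quoted above:
# every part except the last must be at least min_size bytes.
# Assumption: "1 MB" is interpreted as 1 MiB (1,048,576 bytes).
MIN_PART_SIZE = 1024 * 1024


def violates_min_part_size(part_sizes: list[int], min_size: int = MIN_PART_SIZE) -> list[int]:
    """Return the 1-based numbers of parts that break the minimum-size rule."""
    bad = []
    # The last part is exempt, so only parts[:-1] are checked.
    for number, size in enumerate(part_sizes[:-1], start=1):
        if size < min_size:
            bad.append(number)
    return bad


if __name__ == "__main__":
    # Two 5 MiB parts followed by a small tail: allowed.
    print(violates_min_part_size([5 * MIN_PART_SIZE, 5 * MIN_PART_SIZE, 1000]))  # []
    # A 512 KiB part that is not the last one breaks the rule.
    print(violates_min_part_size([5 * MIN_PART_SIZE, 512 * 1024, 1000]))  # [2]
```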

Debug output:

```
HTTP/1.1 400 Bad Request
Content-Length: 635
Connection: keep-alive
Content-Type: application/xml
Date: Wed, 06 Jul 2022 04:17:32 GMT
Server: tencent-cos
X-Cos-Request-Id: NjJjNTBjM2FfMTEzNTJjMGJfMmJjZTdfNDFiNTUyZg==
X-Cos-Trace-Id: OGVmYzZiMmQzYjA2OWNhODk0NTRkMTBiOWVmMDAxODc0OWRkZjk0ZDM1NmI1M2E2MTRlY2MzZDhmNmI5MWI1OTQyYWVlY2QwZTk2MDVmZDQ3MmI2Y2I4ZmI5ZmM4ODFjMzM2MWNjNDZiNDgzZWM5NGYwYTMwMDg2YTRkMjg5ZTc=

2022/07/06 12:17:32.378729 error UploadCompressedStream return error: PUT https://xxxxxxxx.cos.ap-guangzhou.myqcloud.com/clickhouse_backups/ck1/test1/shadow/ql/robot_op_cb_record_400002_400005_diff/default_202205_510810_593922_378_702335.tar: 400 EntityTooSmall(Message: Your proposed upload is smaller than the minimum allowed object size., RequestId: NjJjNTBjM2FfMTEzNTJjMGJfMmJjZTdfNDFiNTUyZg==, TraceId: OGVmYzZiMmQzYjA2OWNhODk0NTRkMTBiOWVmMDAxODc0OWRkZjk0ZDM1NmI1M2E2MTRlY2MzZDhmNmI5MWI1OTQyYWVlY2QwZTk2MDVmZDQ3MmI2Y2I4ZmI5ZmM4ODFjMzM2MWNjNDZiNDgzZWM5NGYwYTMwMDg2YTRkMjg5ZTc=)
```

The only workaround I can suggest for now is to use the old upload/download method.

Add the following settings to `/etc/clickhouse-backup/config.yml`:

```yaml
general:
  upload_by_part: false
  download_by_part: false
```


It's not working.

@michael-liumh what exactly is not working? Could you share the output of the following command?

```bash
LOG_LEVEL=debug COS_DEBUG=1 clickhouse-backup upload <backup_name>
```


I got the same error: `400 EntityTooSmall(Message: Your proposed upload is smaller than the minimum allowed object size.)`

```
2022/07/08 11:08:29.946042 info SELECT value FROM `system`.`build_options` where name='VERSION_INTEGER'
2022/07/08 11:08:29.948835 info SELECT * FROM system.disks;
2022/07/08 11:08:29.953361 info SELECT toInt64(max(data_by_disk) * 1.02) AS max_file_size FROM (SELECT disk_name, max(toInt64(bytes_on_disk)) data_by_disk FROM system.parts GROUP BY disk_name)
2022/07/08 11:08:29.971176 info SELECT count() AS is_macros_exists FROM system.tables WHERE database='system' AND table='macros'

HEAD / HTTP/1.1
Host: test-ops-1234567890.cos.ap-guangzhou.myqcloud.com
Authorization: q-sign-algorithm=sha1&q-ak=aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa&q-sign-time=1657249709;1657253309&q-key-time=1657249709;1657253309&q-header-list=host&q-url-param-list=&q-signature=e0e2402d96f6b1cb545d96288105ae7567deecd5
User-Agent: cos-go-sdk-v5/0.7.30

HTTP/1.1 200 OK
Connection: keep-alive
Content-Type: application/xml
Date: Fri, 08 Jul 2022 03:08:30 GMT
Server: tencent-cos
X-Cos-Bucket-Region: ap-guangzhou
X-Cos-Request-Id: NjJjNzlmYWRfNGMxMzc2MGJfODQwM18zZGEzZjRj
Content-Length: 0

2022/07/08 11:08:30.077562 debug /tmp/.clickhouse-backup-metadata.cache.COS load 0 elements

GET /?delimiter=%2F&prefix=clickhouse_backups%2Fck1%2F HTTP/1.1
Host: test-ops-1234567890.cos.ap-guangzhou.myqcloud.com
Authorization: q-sign-algorithm=sha1&q-ak=aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa&q-sign-time=1657249710;1657253310&q-key-time=1657249710;1657253310&q-header-list=host&q-url-param-list=delimiter;prefix&q-signature=2d61a3d2dec7256805248ef6d9bba7db0097b845
User-Agent: cos-go-sdk-v5/0.7.30

HTTP/1.1 200 OK
Content-Length: 252
Connection: keep-alive
Content-Type: application/xml
Date: Fri, 08 Jul 2022 03:08:30 GMT
Server: tencent-cos
X-Cos-Bucket-Region: ap-guangzhou
X-Cos-Request-Id: NjJjNzlmYWVfNGMxMzc2MGJfODNmOF8zZGIxYjZm

2022/07/08 11:08:30.114395 debug /tmp/.clickhouse-backup-metadata.cache.COS save 0 elements
2022/07/08 11:08:30.114737 debug prepare table concurrent semaphore with concurrency=4 len(tablesForUpload)=1 backup=test11 operation=upload
2022/07/08 11:08:30.115895 debug start uploadTableData ql.robot_op_cb_record_400002_400005_diff with concurrency=4 len(table.Parts[...])=33
2022/07/08 11:08:30.121229 debug start upload 1649 files to test11/shadow/ql/robot_op_cb_record_400002_400005_diff/default_1.tar

GET /clickhouse_backups%2Fck1%2Ftest11%2Fshadow%2Fql%2Frobot_op_cb_record_400002_400005_diff%2Fdefault_1.tar HTTP/1.1
Host: test-ops-1234567890.cos.ap-guangzhou.myqcloud.com
Authorization: q-sign-algorithm=sha1&q-ak=aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa&q-sign-time=1657249710;1657253310&q-key-time=1657249710;1657253310&q-header-list=host&q-url-param-list=&q-signature=f8a462ba704bd8a380da9ee39eeb3e156929b2ae
User-Agent: cos-go-sdk-v5/0.7.30

HTTP/1.1 404 Not Found
Content-Length: 558
Connection: keep-alive
Content-Type: application/xml
Date: Fri, 08 Jul 2022 03:08:30 GMT
Server: tencent-cos
X-Cos-Request-Id: NjJjNzlmYWVfNGMxMzc2MGJfODQyNl8zZGE3NDdi

0 / 18520543972 [---...---] 0.00%
PUT /clickhouse_backups%2Fck1%2Ftest11%2Fshadow%2Fql%2Frobot_op_cb_record_400002_400005_diff%2Fdefault_1.tar HTTP/1.1
Host: test-ops-1234567890.cos.ap-guangzhou.myqcloud.com
Authorization: q-sign-algorithm=sha1&q-ak=aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa&q-sign-time=1657249710;1657253310&q-key-time=1657249710;1657253310&q-header-list=host&q-url-param-list=&q-signature=5aa7f89d150bb2c5ef5fa3162faa9cfff3b846f1
User-Agent: cos-go-sdk-v5/0.7.30

17.25 GiB / 17.25 GiB [===...===] 100.00%
HTTP/1.1 400 Bad Request
Content-Length: 599
Connection: keep-alive
Content-Type: application/xml
Date: Fri, 08 Jul 2022 03:14:51 GMT
Server: tencent-cos
X-Cos-Request-Id: NjJjNzlmYWVfNGMxMzc2MGJfODNmOF8zZGIxYjcy
X-Cos-Trace-Id: OGVmYzZiMmQzYjA2OWNhODk0NTRkMTBiOWVmMDAxODc0OWRkZjk0ZDM1NmI1M2E2MTRlY2MzZDhmNmI5MWI1OTQyYWVlY2QwZTk2MDVmZDQ3MmI2Y2I4ZmI5ZmM4ODFjMzM2MWNjNDZiNDgzZWM5NGYwYTMwMDg2YTRkMjg5ZTc=

2022/07/08 11:14:51.057115 error UploadCompressedStream return error: PUT https://test-ops-1234567890.cos.ap-guangzhou.myqcloud.com/clickhouse_backups/ck1/test11/shadow/ql/robot_op_cb_record_400002_400005_diff/default_1.tar: 400 EntityTooSmall(Message: Your proposed upload is smaller than the minimum allowed object size., RequestId: NjJjNzlmYWVfNGMxMzc2MGJfODNmOF8zZGIxYjcy, TraceId: OGVmYzZiMmQzYjA2OWNhODk0NTRkMTBiOWVmMDAxODc0OWRkZjk0ZDM1NmI1M2E2MTRlY2MzZDhmNmI5MWI1OTQyYWVlY2QwZTk2MDVmZDQ3MmI2Y2I4ZmI5ZmM4ODFjMzM2MWNjNDZiNDgzZWM5NGYwYTMwMDg2YTRkMjg5ZTc=)
2022/07/08 11:14:51.057199 error one of upload go-routine return error: one of uploadTableData go-routine return error: can't upload: PUT https://test-ops-1234567890.cos.ap-guangzhou.myqcloud.com/clickhouse_backups/ck1/test11/shadow/ql/robot_op_cb_record_400002_400005_diff/default_1.tar: 400 EntityTooSmall(Message: Your proposed upload is smaller than the minimum allowed object size., RequestId: NjJjNzlmYWVfNGMxMzc2MGJfODNmOF8zZGIxYjcy, TraceId: OGVmYzZiMmQzYjA2OWNhODk0NTRkMTBiOWVmMDAxODc0OWRkZjk0ZDM1NmI1M2E2MTRlY2MzZDhmNmI5MWI1OTQyYWVlY2QwZTk2MDVmZDQ3MmI2Y2I4ZmI5ZmM4ODFjMzM2MWNjNDZiNDgzZWM5NGYwYTMwMDg2YTRkMjg5ZTc=)
```

My config:

```yaml
general:
  remote_storage: cos
  max_file_size: 0
  disable_progress_bar: false
  backups_to_keep_local: 1
  backups_to_keep_remote: 7
  log_level: info
  allow_empty_backups: false
  download_concurrency: 1
  upload_concurrency: 1
  restore_schema_on_cluster: ""
  upload_by_part: false
  download_by_part: false
clickhouse:
  username: default
  password: "aaaaaaaa"
  host: localhost
  port: 9000
  disk_mapping: {}
  skip_tables:
    - system.*
    - INFORMATION_SCHEMA.*
    - information_schema.*
  timeout: 30m
  freeze_by_part: false
  freeze_by_part_where: ""
  secure: false
  skip_verify: false
  sync_replicated_tables: false
  log_sql_queries: true
  config_dir: /etc/clickhouse-server/
  restart_command: systemctl restart clickhouse-server
  ignore_not_exists_error_during_freeze: true
  tls_key: ""
  tls_cert: ""
  tls_ca: ""
  debug: false
cos:
  url: "https://test-ops-1234567890.cos.ap-guangzhou.myqcloud.com"
  timeout: 24h
  secret_id: "aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa"
  secret_key: "bbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbb"
  path: "clickhouse_backups/ck1/"
  compression_format: tar
  compression_level: 1
  debug: false
```

You can use the `s3` remote storage as a workaround (with `remote_storage: s3` in the `general` section):

```yaml
s3:
  access_key: "<access_key>"
  secret_key: "<secret_key>"
  bucket: "<bucket>"
  endpoint: "https://cos.<region>.myqcloud.com"
  region: "<region>"
  force_path_style: true
  path: "clickhouse-backups"
  compression_level: 1
  compression_format: tar
  concurrency: 1
  part_size: 16777216
  max_parts_count: 10000
  allow_multipart_download: false
  debug: false
```
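With the `s3` settings above, `part_size: 16777216` (16 MiB) is well over the 1 MB COS minimum, and `max_parts_count: 10000` caps how many parts one object can use. The Python sketch below does that arithmetic for the 18520543972-byte archive from the debug log. It assumes every part except the last is exactly `part_size` bytes; clickhouse-backup may size parts differently internally, and `plan_parts` is a hypothetical helper, not part of the tool.

```python
# Sketch: part-count arithmetic for the s3 workaround config.
# Assumption: every part except the last is exactly part_size bytes;
# clickhouse-backup may size parts differently internally.

PART_SIZE = 16777216             # s3.part_size from the config (16 MiB)
MAX_PARTS_COUNT = 10000          # s3.max_parts_count from the config
COS_MIN_PART_SIZE = 1024 * 1024  # assumption: the 1 MB COS minimum, read as 1 MiB


def plan_parts(object_size: int, part_size: int = PART_SIZE) -> tuple[int, int]:
    """Return (number_of_parts, size_of_last_part) for a fixed part size."""
    if object_size <= 0:
        return 0, 0
    count = (object_size + part_size - 1) // part_size  # integer ceiling division
    last = object_size - (count - 1) * part_size
    return count, last


if __name__ == "__main__":
    # Full-size parts exceed the COS minimum.
    print(PART_SIZE >= COS_MIN_PART_SIZE)  # True
    # Largest object that fits in MAX_PARTS_COUNT parts: 160000 MiB = 156.25 GiB.
    print(PART_SIZE * MAX_PARTS_COUNT)  # 167772160000
    # The 18520543972-byte archive from the debug log.
    count, last = plan_parts(18520543972)
    print(count, last)  # 1104 15274724
    print(count <= MAX_PARTS_COUNT)  # True
```

Under these assumptions the 17.25 GiB archive needs 1104 parts, far below the 10000-part cap, and only the last part is shorter than 16 MiB.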


Thanks, it worked.

Unfortunately, using `Upload` instead of `Put` won't help, because `Upload` doesn't allow streaming compression during upload ;(
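For context only, one generic way to feed a stream of unknown length (such as on-the-fly compressed output) into a multipart upload is to buffer it into fixed-size parts, where only the final part may fall below the minimum. The Python sketch below illustrates just that buffering step; it is not how clickhouse-backup or cos-go-sdk-v5 work, `iter_parts` is a hypothetical name, and sending each part to COS is left out.

```python
import io
import zlib
from typing import BinaryIO, Iterator

# Assumption: minimum size of every part except the last (1 MB per the COS docs, read as 1 MiB).
MIN_PART_SIZE = 1024 * 1024


def iter_parts(stream: BinaryIO, part_size: int = MIN_PART_SIZE) -> Iterator[bytes]:
    """Yield chunks of exactly part_size bytes from stream; only the last may be shorter.

    The total length is never needed up front, so this also works on the output
    of an on-the-fly compressor whose final size is unknown.
    """
    buffer = bytearray()
    while True:
        # Ask only for what is missing from the current part (reads may be short).
        data = stream.read(part_size - len(buffer))
        if not data:
            break
        buffer += data
        if len(buffer) == part_size:
            yield bytes(buffer)
            buffer.clear()
    if buffer:
        # Final, possibly undersized part: allowed because it is the last one.
        yield bytes(buffer)


if __name__ == "__main__":
    print(list(iter_parts(io.BytesIO(b"abcdefghij"), part_size=4)))
    # [b'abcd', b'efgh', b'ij']

    # Compressed bytes split into small demo parts reassemble losslessly.
    original = b"clickhouse-backup " * 1000
    parts = list(iter_parts(io.BytesIO(zlib.compress(original)), part_size=16))
    print(zlib.decompress(b"".join(parts)) == original)  # True
```

The trade-off is memory: each in-flight part is held in a buffer of `part_size` bytes before it can be sent.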