stable-diffusion-webui-docker


Failed to start: `docker compose --profile auto up --build` fails with the error "Found no NVIDIA driver on your system."

`nvidia-smi` works fine inside the Docker container, but I still get this error, and PyTorch does not see the GPU:

    >>> import torch
    >>> torch.cuda.is_available()
    False

Are the CUDA libraries included in the Docker image? Is there an environment variable that needs to be set?

Thanks

    => CACHED [stage-2 10/15] RUN apt-get -y install libgoogle-perftools-dev && apt-get clean 0.0s
    => CACHED [stage-2 11/15] RUN --mount=type=cache,target=/root/.cache/pip <<EOF (cd stable-diffusion-webui...) 0.0s
    => CACHED [stage-2 12/15] RUN --mount=type=cache,target=/root/.cache/pip pip install -U opencv-python-headless 0.0s
    => CACHED [stage-2 13/15] COPY . /docker 0.0s
    => CACHED [stage-2 14/15] RUN <<EOF (python3 /docker/info.py /stable-diffusion-webui/modules/ui.py...) 0.0s
    => CACHED [stage-2 15/15] WORKDIR /stable-diffusion-webui 0.0s
    => exporting to image 0.7s
    => => exporting layers 0.0s
    => => writing image sha256:ac812f88a1753b07b7683b0a12823a38517e368a92bcff789cbd2ca80d367775 0.1s
    => => naming to docker.io/library/sd-auto:47 0.0s
    [+] Running 1/0
     ⠿ Container webui-docker-auto-1 Created 0.0s
    Attaching to webui-docker-auto-1
    webui-docker-auto-1 | Mounted .cache
    webui-docker-auto-1 | Mounted LDSR
    webui-docker-auto-1 | Mounted BLIP
    webui-docker-auto-1 | Mounted Hypernetworks
    webui-docker-auto-1 | Mounted VAE
    webui-docker-auto-1 | Mounted GFPGAN
    webui-docker-auto-1 | Mounted RealESRGAN
    webui-docker-auto-1 | Mounted Deepdanbooru
    webui-docker-auto-1 | Mounted ScuNET
    webui-docker-auto-1 | Mounted .cache
    webui-docker-auto-1 | Mounted StableDiffusion
    webui-docker-auto-1 | Mounted embeddings
    webui-docker-auto-1 | Mounted ESRGAN
    webui-docker-auto-1 | Mounted config.json
    webui-docker-auto-1 | Mounted SwinIR
    webui-docker-auto-1 | Mounted Lora
    webui-docker-auto-1 | Mounted MiDaS
    webui-docker-auto-1 | Mounted ui-config.json
    webui-docker-auto-1 | Mounted BSRGAN
    webui-docker-auto-1 | Mounted Codeformer
    webui-docker-auto-1 | Mounted extensions
    webui-docker-auto-1 | + python -u webui.py --listen --port 7860 --allow-code --medvram --xformers --enable-insecure-extension-access --api
    webui-docker-auto-1 | Warning: caught exception 'Found no NVIDIA driver on your system. Please check that you have an NVIDIA GPU and installed a driver from http://www.nvidia.com/Download/index.aspx', memory monitor disabled
    webui-docker-auto-1 | Removing empty folder: /stable-diffusion-webui/models/BSRGAN
    webui-docker-auto-1 | Loading weights [cc6cb27103] from /stable-diffusion-webui/models/Stable-diffusion/v1-5-pruned-emaonly.ckpt
    webui-docker-auto-1 | Creating model from config: /stable-diffusion-webui/configs/v1-inference.yaml
    webui-docker-auto-1 | LatentDiffusion: Running in eps-prediction mode
    webui-docker-auto-1 | DiffusionWrapper has 859.52 M params.
    webui-docker-auto-1 | loading stable diffusion model: RuntimeError
    webui-docker-auto-1 | Traceback (most recent call last):
    webui-docker-auto-1 |   File "/stable-diffusion-webui/webui.py", line 111, in initialize
    webui-docker-auto-1 |     modules.sd_models.load_model()
    webui-docker-auto-1 |   File "/stable-diffusion-webui/modules/sd_models.py", line 421, in load_model
    webui-docker-auto-1 |     sd_hijack.model_hijack.hijack(sd_model)
    webui-docker-auto-1 |   File "/stable-diffusion-webui/modules/sd_hijack.py", line 160, in hijack
    webui-docker-auto-1 |     self.optimization_method = apply_optimizations()
    webui-docker-auto-1 |   File "/stable-diffusion-webui/modules/sd_hijack.py", line 40, in apply_optimizations
    webui-docker-auto-1 |     if cmd_opts.force_enable_xformers or (cmd_opts.xformers and shared.xformers_available and torch.version.cuda and (6, 0) <= torch.cuda.get_device_capability(shared.device) <= (9, 0)):
    webui-docker-auto-1 |   File "/usr/local/lib/python3.10/site-packages/torch/cuda/__init__.py", line 357, in get_device_capability
    webui-docker-auto-1 |     prop = get_device_properties(device)
    webui-docker-auto-1 |   File "/usr/local/lib/python3.10/site-packages/torch/cuda/__init__.py", line 371, in get_device_properties
    webui-docker-auto-1 |     _lazy_init() # will define _get_device_properties
    webui-docker-auto-1 |   File "/usr/local/lib/python3.10/site-packages/torch/cuda/__init__.py", line 229, in _lazy_init
    webui-docker-auto-1 |     torch._C._cuda_init()
    webui-docker-auto-1 | RuntimeError: Found no NVIDIA driver on your system. Please check that you have an NVIDIA GPU and installed a driver from http://www.nvidia.com/Download/index.aspx
    webui-docker-auto-1 |
    webui-docker-auto-1 |
    webui-docker-auto-1 | Stable diffusion model failed to load, exiting
    webui-docker-auto-1 exited with code 1

What is the output of `nvidia-smi`?


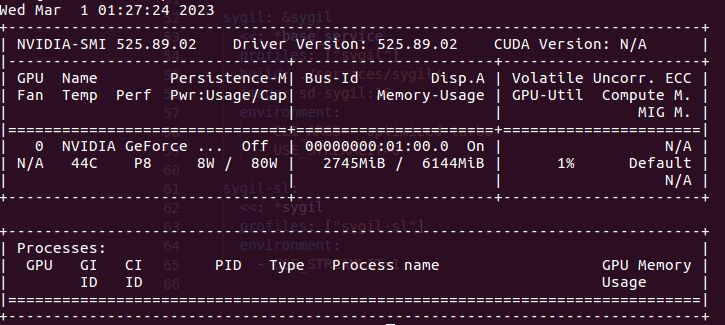

The screenshot above is the output of `nvidia-smi` inside the container.

Following https://github.com/NVIDIA/nvidia-docker/issues/533#issuecomment-344425678, adding the environment variables `NVIDIA_DRIVER_CAPABILITIES=compute,utility` and `NVIDIA_VISIBLE_DEVICES=all` to the container resolves this problem.


Great, thanks. After I added the environment variables, it works now.

However, I still don't understand why this image needs these settings, or which CUDA .so file is loaded in the container (I couldn't find the CUDA library in the image...).
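For anyone hitting the same thing, here is a rough diagnostic sketch to run inside the container. As far as I understand it (this is my reading of how the NVIDIA container runtime behaves, not something confirmed in this thread), the CUDA driver library `libcuda.so.1` is not shipped in the image. The runtime mounts it from the host driver when the `compute` capability is requested, while `utility` only covers tools like `nvidia-smi`. That would explain why `nvidia-smi` worked but PyTorch did not. The helper names `compute_requested` and `driver_library_loadable` are made up for this example.

```python
import ctypes
import os


def compute_requested(value):
    """Return True if an NVIDIA_DRIVER_CAPABILITIES value asks for CUDA.

    An unset or empty value returns False here: in that case the runtime's
    own default applies, and this sketch does not try to guess it.
    """
    if not value:
        return False
    caps = {c.strip() for c in value.split(",") if c.strip()}
    return "compute" in caps or "all" in caps


def driver_library_loadable(name="libcuda.so.1"):
    """Return True if the dynamic loader can find and open the library."""
    try:
        ctypes.CDLL(name)
    except OSError:
        return False
    return True


if __name__ == "__main__":
    caps = os.environ.get("NVIDIA_DRIVER_CAPABILITIES")
    print("NVIDIA_DRIVER_CAPABILITIES =", caps)
    print("NVIDIA_VISIBLE_DEVICES     =", os.environ.get("NVIDIA_VISIBLE_DEVICES"))
    print("compute capability requested:", compute_requested(caps))
    print("libcuda.so.1 loadable:", driver_library_loadable())

    try:
        import torch  # optional: only reported if PyTorch is installed
    except ImportError:
        print("torch is not installed in this environment")
    else:
        print("torch built for CUDA:", torch.version.cuda)
        print("torch.cuda.is_available():", torch.cuda.is_available())
```

If `libcuda.so.1` is not loadable while `nvidia-smi` still works, the container most likely has only the `utility` capability, and setting the two environment variables from the answer above should be the fix.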